
The NVIDIA GeForce RTX 4080 may have a TGP that's 100 watts higher than the GeForce RTX 3080 (10 GB).


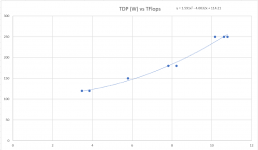

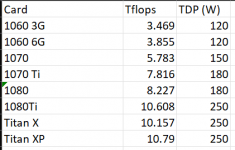

> So if the TDP of the card is 33% higher than the TDP of the previous generation, wouldn't we expect the performance increase to be greater than 33%? Otherwise, all they are doing is pumping more clocks through the same IPC at the cost of more power.

Power doesn't tend to scale linearly with performance. It usually follows the square: it takes 4x the power to double performance.
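To put numbers on that rule of thumb: if power grows with the square of performance, performance grows only with the square root of power. Below is a minimal Python sketch, assuming that idealized power ∝ performance² model. The function names and the `exponent` parameter are illustrative, not from any tool, and the 320 W baseline is NVIDIA's published total graphics power for the RTX 3080 10 GB, not a figure from this thread.

```python
def perf_from_power(power_ratio: float, exponent: float = 2.0) -> float:
    """Performance multiplier implied by a power multiplier if power ~ perf**exponent."""
    return power_ratio ** (1.0 / exponent)


def power_from_perf(perf_ratio: float, exponent: float = 2.0) -> float:
    """Power multiplier needed for a performance multiplier under the same assumption."""
    return perf_ratio ** exponent


# The rule of thumb from the thread: doubling performance takes 4x the power.
print(power_from_perf(2.0))  # 4.0

# The "33% higher TDP" question, plus the rumored 320 W -> 420 W jump.
for label, ratio in [("+33% power", 1.33), ("320 W -> 420 W", 420 / 320)]:
    gain = (perf_from_power(ratio) - 1) * 100
    print(f"{label}: about +{gain:.0f}% performance")
```

If the square model held on an unchanged node, a roughly 33% power increase alone would buy only about 15% more performance, so any gain beyond that would have to come from architecture or process improvements.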

> So if the TDP of the card is 33% higher than the TDP of the previous generation, wouldn't we expect the performance increase to be greater than 33%? Otherwise, all they are doing is pumping more clocks through the same IPC at the cost of more power.

From the sound of it, they'll need to do at least that. AMD apparently has a pretty large improvement in store, if the rumors are to be believed (even in part).

> Just a rough rule of thumb used when dealing with electrical and mechanical power. It doesn't exactly apply to computing power, but it doesn't stray too terribly far as a general rule.

They can optimize the curve quite a bit, but they are limited by what a particular fabrication node will allow. Node selection, of course, is part of the design process itself!

> Power doesn't tend to scale linearly with performance. It usually follows the square: it takes 4x the power to double performance.

Let me introduce you to PASCAL.

Just a rough rule of thumb used when dealing with electrical and mechanical power. It doesn't exactly apply to computing power, but it doesn't stray too terribly far as a general rule.

> Let me introduce you to PASCAL.

Might need some context there. I know of Pascal, the SI unit of pressure; Pascal, the programming language; and Pascal, the mathematician who was their namesake.

Certainly the exception rather than the rule, but it proves it can be done.

> Might need some context there. I know of Pascal, the SI unit of pressure; Pascal, the programming language; and Pascal, the mathematician who was their namesake.

Sorry to do this to you...

Touché, I completely missed the NVIDIA reference.

> Might need some context there. I know of Pascal, the SI unit of pressure; Pascal, the programming language; and Pascal, the mathematician who was their namesake.

PASCAL, as in the GTX 10x0 family.

But generally, I still stand by my rule of thumb: for a given technology, node, etc., if you want to double the performance, you need to quadruple the power.

> Wait, what is TGP? Is that a typo or some new BS metric so you can't do direct comparisons with previous hardware?

The GPU folks have moved away from TDP and now use either TGP (total graphics power, meaning power just from the GPU) or TBP (total board power).

> I'm already pulling more than that with my rather extreme 6900 XT.

I just have to flip a switch to get my 3080 12GB FTW3 to pull 450W.

> 800 watt GPU... Crap, guess I'll need a new power... oh wait, I'm good, never mind.

I've been thinking it, but the new standards (i.e., the single 600W connectors...) aren't really implemented and available for testing, let alone for purchase.
