Image: NVIDIA

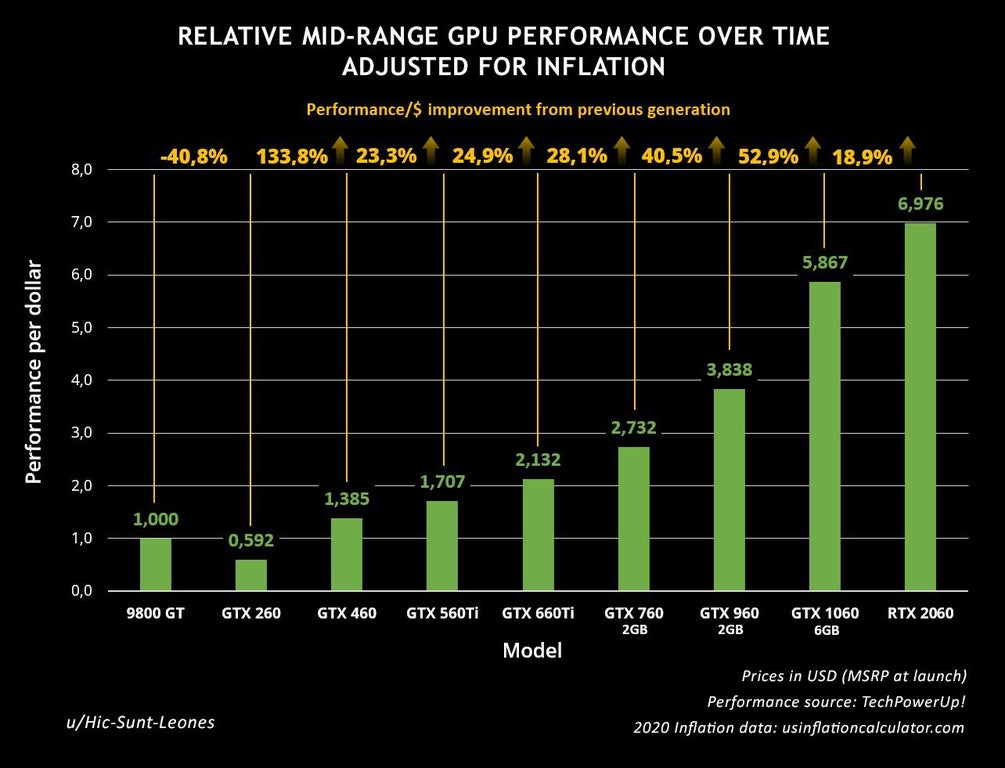

Have you ever wondered how much value you’ve truly gotten out of your mid-range GeForce cards? r/NVIDIA’s Hic-Sunt-Leones has put together a chart that shows how much performance per dollar each generation has delivered.

Image: Hic-Sunt-Leones

The clear winner, at least among recent generations, is the Pascal microarchitecture, whose GTX 1060 (6 GB) delivered a 53-percent improvement over the previous generation, Maxwell. The biggest loser is Turing, whose RTX 2060 managed a mere 19-percent increase over Pascal.

Plenty of GeForce GTX 1080 Ti owners have held on to that card to this day, and the numbers in...
