
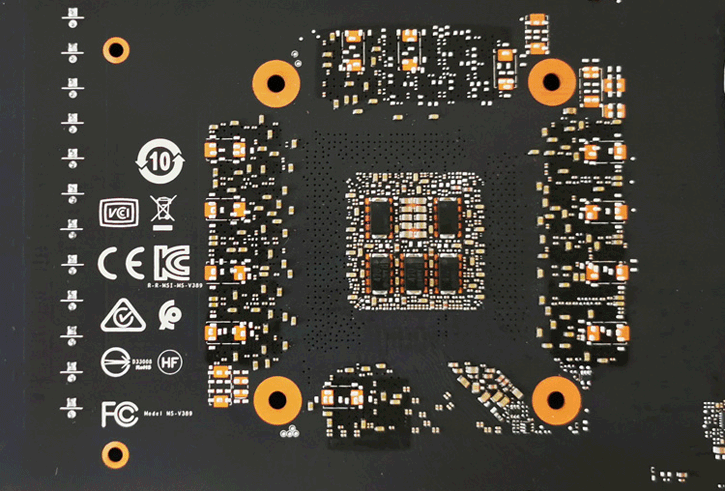

Image: NVIDIA

PCWorld’s Brad Chacos has published a story on NVIDIA’s new GeForce Game Ready 456.55 WHQL driver. As various user reports over the last few hours suggested, the driver does appear to put a band-aid on the game-crashing issues that owners of custom GeForce RTX 3080 cards have been complaining about over the past few days. But there’s a cost: reduced clock speeds.

“…with the original drivers, the [Horizon Zero Dawn] benchmark ran at a mostly consistent 2010MHz on...

