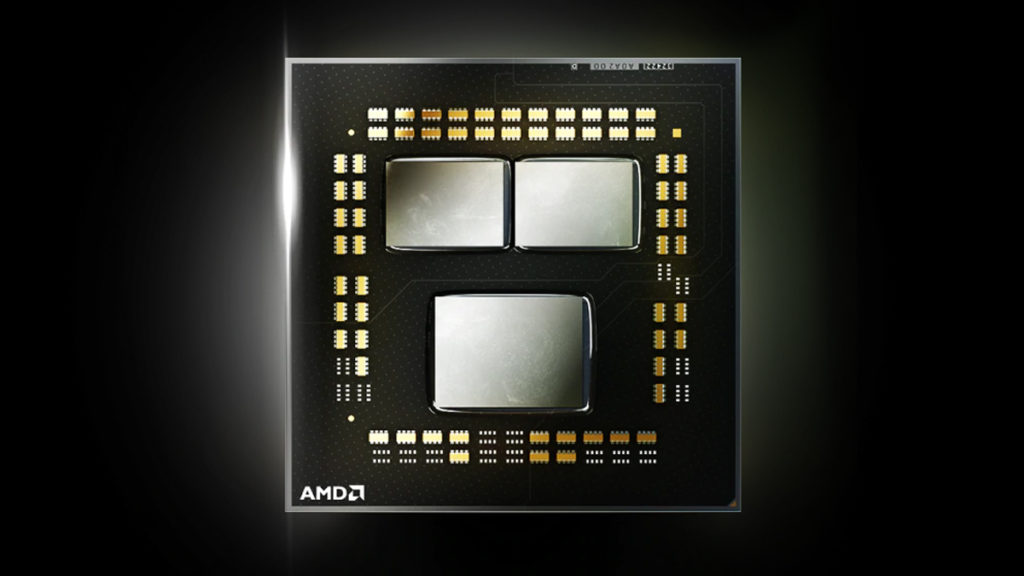

Image: AMD

AMD users who plan to build a new system around the company’s next generation of Ryzen chips may not need to pair it with a dedicated graphics card.

Chips and Cheese has shared documents that suggest all upcoming Ryzen processors based on the Zen 4 architecture will feature iGPUs. (See the “on-chip graphics” row in the compatibility table below, which indicates integrated graphics.)

It isn’t clear whether these iGPUs will actually be enabled in all models, but the news is good for Ryzen users who need only modest graphics performance.

The documents stem from the recent GIGABYTE leak, which has revealed plenty of other interesting information such as the specifications for AMD’s upcoming Ryzen Threadripper processors.

A new block diagram shared by Chips and Cheese has created...