Well, it is actually less impressive than one might expect.

It turns out that once you get up to higher network speeds, contrary to what one might expect, the NIC itself is rarely the bottleneck.

I'm not entirely clear on what the actual bottleneck is, but it is not CPU, not storage, not PCIe, not memory...

I think maybe the software just isn't written with those kinds of speeds in mind, resulting in idle time, lock contention, wait states, etc.

I was able to fully max out 10Gbit before the upgrade to 40Gbit, getting ~1200MB/s. (I never got the full theoretical 1250MB/s, but I presume that is down to Ethernet/TCP framing and NFS protocol overhead.)

After moving to 40Gbit, I did get a modest performance bump between the workstation and the server (the only two systems at 40Gbit), but I was never able to hit more than ~16Gbit/s between the two on a single connection, which worked out to ~1800MB/s transfers.
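For reference, here is roughly where the wire-level ceilings sit. 10Gbit/s divided by 8 is 1250MB/s before any overhead, and Ethernet framing plus IP/TCP headers take a further bite out of every frame. This is a back-of-the-envelope sketch, assuming IPv4, TCP with the timestamp option, and either a standard 1500-byte or a jumbo 9000-byte MTU (none of which is stated about my setup). It ignores NFS/RPC headers, ACKs and retransmits entirely, so real transfers will land lower still.

```python
"""Rough best-case TCP payload throughput over Ethernet.

Assumptions: IPv4, TCP with timestamps (20-byte header + 12-byte option),
no VLAN tag. NFS/RPC overhead, ACK traffic and retransmits are ignored.
"""

# Per-frame bytes on the wire that never carry payload.
PREAMBLE_SFD = 8       # preamble + start-of-frame delimiter
ETH_HEADER = 14        # destination MAC + source MAC + EtherType
FCS = 4                # frame check sequence
INTERFRAME_GAP = 12    # minimum idle gap between frames, in byte times
IPV4_HEADER = 20
TCP_HEADER = 32        # 20-byte base header + 12 bytes of timestamp option


def max_payload_mb_per_s(link_gbit: float, mtu: int = 1500) -> float:
    """Best-case TCP payload rate in MB/s (10**6 bytes per second)."""
    line_rate_bytes = link_gbit * 1e9 / 8  # e.g. 10 Gbit/s -> 1250 MB/s raw
    wire_bytes = mtu + ETH_HEADER + FCS + PREAMBLE_SFD + INTERFRAME_GAP
    payload_bytes = mtu - IPV4_HEADER - TCP_HEADER
    return line_rate_bytes * payload_bytes / wire_bytes / 1e6


if __name__ == "__main__":
    for gbit in (10, 40):
        for mtu in (1500, 9000):
            print(f"{gbit} Gbit/s, MTU {mtu}: "
                  f"~{max_payload_mb_per_s(gbit, mtu):.0f} MB/s")
```

Under these assumptions a 1500-byte MTU gives roughly 1177MB/s at 10Gbit and roughly 4707MB/s at 40Gbit, and jumbo frames push those to about 1238MB/s and 4950MB/s. Either way, the ~1800MB/s single-connection figure above is nowhere near what the 40Gbit link itself can carry.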

Of course, I knew to expect this going in; it's not like I didn't read up on it. The opportunity just presented itself when some cheap dual-port 40Gbit Intel NICs showed up on eBay, and I was curious to test it, so I did.

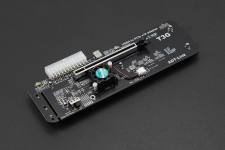

Where NICs faster than 10Gbit (25Gbit, 40Gbit, 100Gbit, etc.) really shine is aggregate traffic to many clients at the same time. The 40Gbit connection has allowed me to reduce the server's networking to a single 40Gbit link to the switch, supporting all the VMs on it and all the clients connecting to it. It makes things a bit simpler, and it also saves power: the dual-port 40Gbit XL710 with two QSFP+ fiber transceivers in it uses considerably less power and produces considerably less heat than the two dual-port Intel X520 SFP+ NICs I used before.

I probably didn't need to do this, because 40Gbit is a lot of bandwidth, but since both NICs were dual-port, I connected port 0 on both the server and the workstation to the switch, and connected port 1 on each directly to the other for a dedicated link on a separate subnet.
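One thing worth knowing about a direct link on its own subnet: the OS picks the outgoing port by matching the destination address against its routes (longest prefix wins), so the dedicated link is only used when each machine addresses the other by its direct-link IP. A minimal sketch of that lookup for one host; the subnets, addresses and port labels are all hypothetical, and it only handles IPv4:

```python
"""Sketch of how a host picks an egress port with a dedicated direct link.

Hypothetical layout: port 0 sits on the switch LAN, port 1 is cabled
straight to the other machine on its own small subnet. All addresses
and labels are made up for illustration; IPv4 only.
"""
import ipaddress

# Simplified routing table for one host: (destination network, egress port).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "port0 (default gateway)"),
    (ipaddress.ip_network("192.168.1.0/24"), "port0 (switch LAN)"),
    (ipaddress.ip_network("10.40.40.0/30"), "port1 (direct link)"),
]


def egress_port(dest: str) -> str:
    """Return the port of the longest-prefix route that contains dest."""
    addr = ipaddress.ip_address(dest)
    matches = [(net.prefixlen, port) for net, port in ROUTES if addr in net]
    # The default route (/0) matches every IPv4 address, so matches is never empty.
    return max(matches)[1]


if __name__ == "__main__":
    for dest in ("10.40.40.2", "192.168.1.10", "1.1.1.1"):
        print(dest, "->", egress_port(dest))
```

In this made-up layout, 10.40.40.2 goes out port 1, while 192.168.1.10 and 1.1.1.1 both go out port 0. So, for example, an NFS mount pointed at the server's LAN address would still travel through the switch even with the direct cable in place.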

This means both the server and my workstation now have all other networking either disabled (if onboard) or removed (if discrete), and use only the 40Gbit NICs.

It felt like the right thing to do, to keep the workstation-to-server traffic and everything else from stepping on each other, but in reality just connecting one port on each machine to the switch would likely have been more than enough, and I am probably not seeing any difference there.