Image: AMD

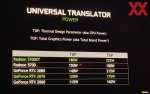

We previously shared a leak from Patrick Schur that suggested AMD’s flagship Radeon RX 6000 Series graphics card would feature a TGP of 255 W. Because NVIDIA and AMD label their total power specs differently (AMD uses Total Board Power [TBP], while NVIDIA uses Total Graphics Power [TGP]), Igor Wallossek has published consumption figures that give us a better idea of how much power the red team’s RDNA 2 cards really use.

After figuring in...
