I'd want to know about CPU usage.

Nothing they're doing here is particularly hard except for muxing the 32 PCIe lanes from the eight individual drives down to 16 lanes, with those ASICs usually being pretty expensive on their own. Still nowhere near the cost of one drive, let alone eight though, I'd imagine.
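To put rough numbers on that lane muxing, here's a quick back-of-the-envelope sketch. The 8 drives, 32 lanes and 16-lane uplink come from the post above. The PCIe generation isn't stated anywhere in this thread, so the per-lane figure below is an assumption (PCIe 4.0, about 1.97 GB/s usable per lane per direction); swap in your own number.

```python
# Back-of-the-envelope lane math for an 8-drive card behind an x16 slot.
DRIVES = 8
LANES_PER_DRIVE = 4        # 32 drive-side lanes / 8 drives
UPLINK_LANES = 16          # lanes going to the host slot

# ASSUMPTION: PCIe 4.0, roughly 1.97 GB/s usable per lane per direction.
# The thread does not say which PCIe generation the card uses.
GB_PER_S_PER_LANE = 1.97


def oversubscription(drives: int, lanes_per_drive: int, uplink_lanes: int) -> float:
    """Ratio of drive-side lanes to host-side lanes."""
    return (drives * lanes_per_drive) / uplink_lanes


def uplink_ceiling_gb_s(uplink_lanes: int, gb_per_s_per_lane: float) -> float:
    """Upper bound on host throughput, ignoring protocol and RAID overhead."""
    return uplink_lanes * gb_per_s_per_lane


ratio = oversubscription(DRIVES, LANES_PER_DRIVE, UPLINK_LANES)
ceiling = uplink_ceiling_gb_s(UPLINK_LANES, GB_PER_S_PER_LANE)
per_drive_share = ceiling / DRIVES  # fair share if all drives stream at once

print(f"oversubscription: {ratio:.1f}:1")
print(f"uplink ceiling:   {ceiling:.2f} GB/s")
print(f"per-drive share:  {per_drive_share:.2f} GB/s")
```

So the switch is 2:1 oversubscribed: with every drive streaming at once, each one gets about half of what its own x4 link could carry, under the assumed per-lane rate.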

Unless there's also some kind of controller on the board that implements the RAID functionality (and hopefully has a means of configuration that allows the array to be bootable), I expect that there's going to be some CPU overhead given the 'absurd' transfer speeds on tap.

And the card itself is surprising in that it simply isn't going to be a mass-market product. I expect that Gigabyte is leveraging their extensive supply chain to source the components inexpensively and that most of the 'work' beyond slapping off-the-shelf parts together was already done aside from the UI, for which they already have expertise available from the folks that do software for their other products.

So maybe this wasn't expensive in terms of sunk costs.

Who the hell is going to manage to keep one of these pegged performance-wise? I mean, look at the high-speed NVMe drive that LTT just did a test on. In basically a day of testing the heat dissipation of its watercooling block, they burned 2% of the drive life.

Really depends on the workload I'd think.

I'd expect LTT found the workloads that generated the most heat given the premise of their work, but I doubt that such a workload is indicative of the target audience for something like this Gigabyte drive. Or really any flash drive, as cooling flash with a water loop seems a bit absurd and potentially even counterproductive, as some flash actually needs to be warmer.

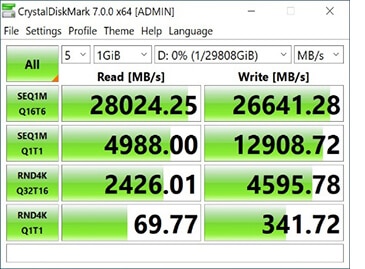

And for most prosumer workloads, heavy writes aren't generally on the table. And by heavy I mean >0.5 DWPD (drive writes per day) as a ballpark. In the CrystalBench screenshot from Gigabyte's website above, they're using a 1GB write block to test a 32TB volume... which, going by the write speeds reported, indicates that the storage card hasn't gotten close to exceeding the cumulative write cache on its constituent drives.

Having done that myself with an Intel 660p and its first-gen QLC flash, well, if your goal is to move that much data around beyond the confines of one system, you're better off spending the budget on a real bank of enterprise spinners and a decent server and networking. Because the flash isn't going to be able to hang, not just in terms of longevity, but even day to day performance!
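For a sense of scale on the 0.5 DWPD ballpark above, here's a minimal sketch of the arithmetic. The 32 TB capacity and the 0.5 DWPD threshold are from the post; the function names are just illustrative, and it assumes decimal terabytes and writes spread evenly over 24 hours, which real workloads won't do.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_writes_tb(dwpd: float, capacity_tb: float) -> float:
    """TB written per day at a given drive-writes-per-day rate."""
    return dwpd * capacity_tb


def average_write_mb_s(tb_per_day: float) -> float:
    """Average sustained write rate in MB/s (decimal units: 1 TB = 1e6 MB)."""
    return tb_per_day * 1_000_000 / SECONDS_PER_DAY


capacity_tb = 32.0   # volume size from the screenshot discussion
threshold = 0.5      # the "heavy writes" ballpark from the post

tb_per_day = daily_writes_tb(threshold, capacity_tb)
avg_rate = average_write_mb_s(tb_per_day)

print(f"{threshold} DWPD on {capacity_tb:.0f} TB = {tb_per_day:.0f} TB/day")
print(f"average sustained write rate: {avg_rate:.1f} MB/s")
```

That works out to 16 TB written every day, or roughly 185 MB/s averaged around the clock, just to reach the "heavy" line on a volume this size.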

Had to do a double take on the article title for this. I really thought that was a GPU for a moment.

I swear PNY has used a cooler like this before...