Introduction

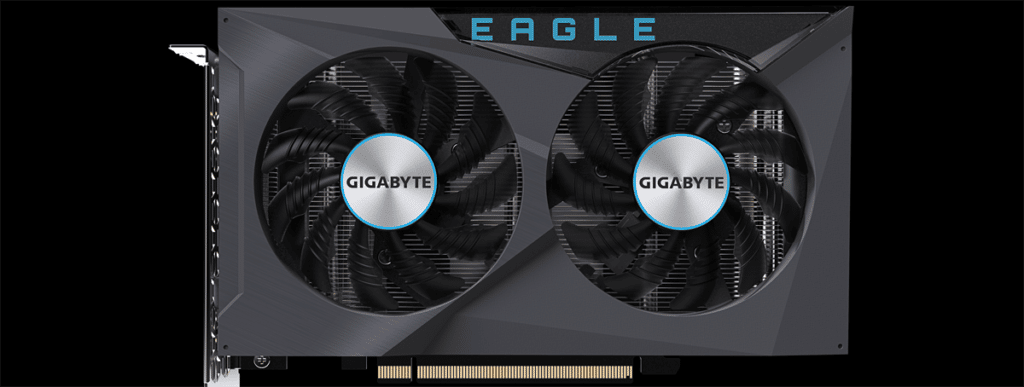

Today, AMD is launching its new Radeon RX 6500 XT GPU, a completely new ASIC built from the ground up on TSMC’s new N6 manufacturing node. This is the world’s first consumer 6nm GPU, and while that is surely impressive, what about the rest of the specs? Are they enough to justify the 6500 XT’s MSRP, given the pricing realm we find ourselves in today?

First and foremost, the AMD Radeon RX 6500 XT is AMD’s answer to the entry-level-to-mainstream video card market. Think of the Radeon RX 6500 XT as an entry-level video card if you are just getting into gaming, or want to upgrade from an older video card like the Radeon RX 570 or GeForce GTX 1650. In fact, you might even think of it as a means to move from integrated graphics to discrete video card performance, or at least as the most cost-effective way to do so.

AMD...
