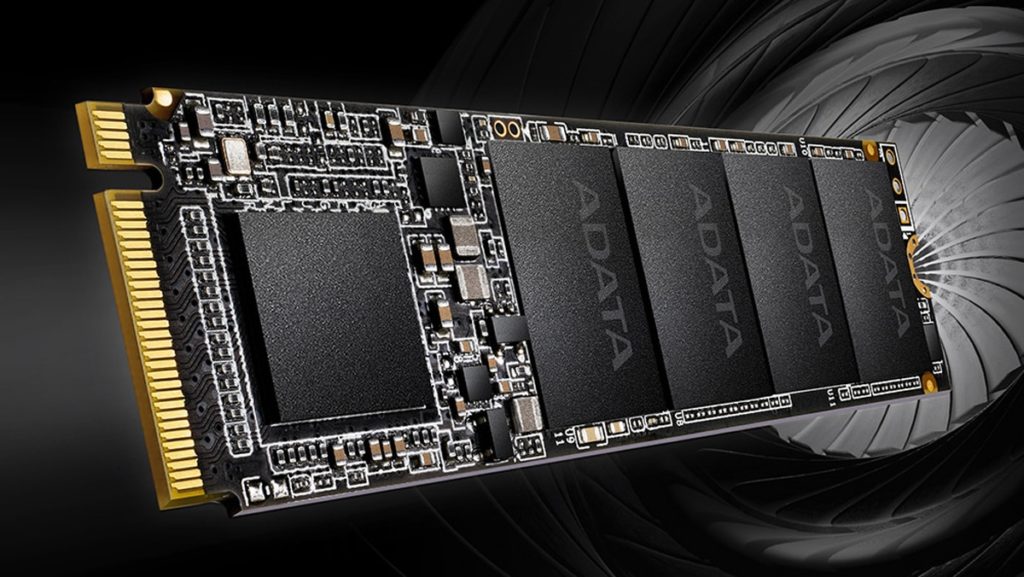

Image: ADATA

Enthusiasts with some of the latest and greatest PC hardware (e.g., 12th Gen Intel Core processors) will be able to move files around faster than ever within a few years, once new NVMe SSDs built on the next-generation PCIe 5.0 standard become available. That’s according to storage company Silicon Motion, which revealed in a recent earnings call that its first PCIe 5.0 controllers for consumer SSDs are slated for release in 2024. Silicon Motion’s SM2508 controller enables read speeds of up to 14 GB/s and write speeds of up to 12 GB/s, around double the speeds of current PCIe 4.0 drives.
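For a rough sanity check on the "around double" figure, here is a small sketch of the theoretical link bandwidth for each PCIe generation. It assumes a four-lane (x4) link, which is typical for consumer M.2 NVMe drives but is not stated in the article, and it uses the per-lane rates of 16 GT/s (PCIe 4.0) and 32 GT/s (PCIe 5.0) with 128b/130b encoding. The function name is made up for illustration, and real drives land below these ceilings because of protocol overhead.

```python
def pcie_link_bandwidth_gb_s(gt_per_s: float, lanes: int = 4) -> float:
    """Theoretical one-direction link bandwidth in GB/s (decimal).

    Assumes 128b/130b line encoding (used by PCIe 3.0 and later) and
    ignores packet/protocol overhead, so real throughput is lower.
    """
    encoding_efficiency = 128 / 130
    bits_per_second = gt_per_s * 1e9 * lanes * encoding_efficiency
    return bits_per_second / 8 / 1e9


# Assumption: x4 link, as on typical consumer M.2 NVMe SSDs.
gen4 = pcie_link_bandwidth_gb_s(16.0)  # PCIe 4.0: 16 GT/s per lane
gen5 = pcie_link_bandwidth_gb_s(32.0)  # PCIe 5.0: 32 GT/s per lane

print(f"PCIe 4.0 x4 ceiling: {gen4:.2f} GB/s")
print(f"PCIe 5.0 x4 ceiling: {gen5:.2f} GB/s")
print(f"14 GB/s read claim vs. PCIe 5.0 x4 ceiling: {14 / gen5:.0%}")
```

Under those assumptions the ceilings come out to roughly 7.9 GB/s for PCIe 4.0 x4 and 15.8 GB/s for PCIe 5.0 x4, so the quoted 14 GB/s read speed sits below the PCIe 5.0 limit and above anything a PCIe 4.0 x4 link could carry.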

PCIe 5.0 SSDs promising up to 14GB/s of bandwidth will be ready in 2024 (Ars Technica)

Other...
