Right, I know about antitrust laws, but CrowdStrike isn't a monopoly, so antitrust wouldn't apply, at least not as I understand it.

I don't think there's anything nefarious, anticompetitive, or criminal going on here; it's more of a liability issue, really. Half the world grinding to a halt because one company sent out one bad update should be concerning.

It is concerning, and it's not something anyone knew was possible. It was only possible because of the way CrowdStrike works and the way the company chose to get around Microsoft's WHQL certification requirements for driver files. While CrowdStrike isn't exactly a driver, it loads a driver file, which it needs in order to operate in kernel memory space and which requires WHQL certification to run on servers.

I don't think the government should step in and force companies to use multiple vendors or anything, but surely there could be some kind of backup plan in case this happens again.

The companies affected did have backup plans. It also comes down to how CrowdStrike was able to push an update that runs in kernel memory and bypasses companies' own staging rules for CrowdStrike updates. This shouldn't be legally allowed, both for liability reasons and because of the obvious risks it poses.

Depends on how you define "monopoly", which seems to vary depending on which industry you are in and who is in political power at any given time.

If you just use the strict definition of the word, then yeah, there is competition for CrowdStrike, so there are options.

But...

Commanding a sufficiently high percentage of the marketplace would imply there is no meaningful competition: even though competitors exist, they have no meaningful economic impact and/or are not viable competitive choices.

You know, kinda like Microsoft has been with Windows and Office.

This isn't true. There are plenty of alternatives out there, as evidenced by the fact that about half the world's systems were unaffected. Symantec, McAfee, and other options all still exist in the space and are used by corporations all over the world.

CrowdStrike has simply been a juggernaut that has increased its market share spectacularly in the last few years. It owes this to being really good at what it does. Unfortunately, what makes it work so well is also what created this problem. If it had to put every update file through more validation and full WHQL certification, it wouldn't be able to keep up with and protect against new threats as quickly as it does.

Just to be clear, the reason this happened is that CrowdStrike has to operate in kernel memory space to offer the level of protection it does. To avoid stringent WHQL certification for every update, it essentially has a fixed driver .sys file that loads from Windows\System32\drivers\CrowdStrike\ and stores "definition files" (CrowdStrike calls them "channel files") alongside it that the driver calls on. That's the rub: they don't update the actual driver .sys file that goes through WHQL certification. They only add definition files that the driver reads.

They also have the option to push these updates out in a way that bypasses staging rules, which is why development, test, QA, and any other non-production systems crashed right alongside production servers.
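For readers outside IT, here is a minimal sketch of what a staging rule buys you. It assumes a hypothetical ring order (`dev`, `test`, `qa`, `prod`) and a hypothetical `healthy` callback standing in for whatever health check an organization actually uses. It is not how CrowdStrike's update policies are implemented; it only illustrates why skipping the rings lets one bad update reach every environment at once.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical ring order; real staging policies are set per organization.
RING_ORDER = ["dev", "test", "qa", "prod"]


@dataclass
class Rollout:
    update_id: str
    deployed: List[str] = field(default_factory=list)  # rings that received the update
    halted_at: Optional[str] = None  # first ring that failed its health check


def staged_rollout(update_id: str,
                   healthy: Callable[[str, str], bool],
                   honor_staging: bool = True) -> Rollout:
    """Push update_id ring by ring, stopping at the first unhealthy ring.

    With honor_staging=False the update lands on every ring at once,
    so a bad update is never caught before it reaches production.
    """
    rollout = Rollout(update_id)
    if not honor_staging:
        rollout.deployed = list(RING_ORDER)
        return rollout
    for ring in RING_ORDER:
        rollout.deployed.append(ring)
        if not healthy(update_id, ring):
            rollout.halted_at = ring
            break
    return rollout


def crashes_everything(update_id: str, ring: str) -> bool:
    """Simulated health check for an update that bluescreens every machine."""
    return False


staged = staged_rollout("bad-update", crashes_everything)
bypassed = staged_rollout("bad-update", crashes_everything, honor_staging=False)
print(staged.deployed, staged.halted_at)  # ['dev'] dev
print(bypassed.deployed)                  # ['dev', 'test', 'qa', 'prod']
```

With staging honored, the bad update takes down only the dev ring; with staging bypassed, prod goes down at the same moment as everything else.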

Basically, CrowdStrike can update with impunity, and the worst part is that this by itself wouldn't have caused an issue had the C-00000291-00000000-00000029.sys file actually contained the code it was supposed to. When opened up, that file contained nothing but zeroes. Given that it causes a bluescreen almost 100% of the time, it's clear the update was never tested by CrowdStrike prior to deployment. Whatever process they use to generate those update files failed spectacularly, and they obviously did no internal testing; otherwise the CrowdStrike devs would surely have seen the problem and not pushed the update.
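To make the "zero internal testing" point concrete, here is a minimal sketch of the kind of cheap sanity check that would reject a file like the one described (empty, too small, or nothing but 0x00 bytes) before anything tries to parse it. The `MIN_SIZE` threshold, the `C-*.sys` glob, and the function names are hypothetical; a real pipeline would also verify the file's expected structure and actually boot it on test machines.

```python
from pathlib import Path
from typing import List

MIN_SIZE = 16  # hypothetical minimum size for a definition file, in bytes


def looks_valid(data: bytes, min_size: int = MIN_SIZE) -> bool:
    """Reject definition content that is too short or entirely zero bytes."""
    if len(data) < min_size:
        return False
    # any() over bytes iterates integers; it is False only if every byte is 0x00.
    return any(data)


def loadable_files(directory: Path, pattern: str = "C-*.sys") -> List[Path]:
    """Return the definition files in directory that pass looks_valid()."""
    return [p for p in sorted(directory.glob(pattern)) if looks_valid(p.read_bytes())]


print(looks_valid(bytes(4096)))       # False: 4 KiB of zeroes
print(looks_valid(b"\xaa\x55" * 32))  # True
```

A check like this costs microseconds per file; it would not catch every malformed file, but it would catch one that is all zeroes.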

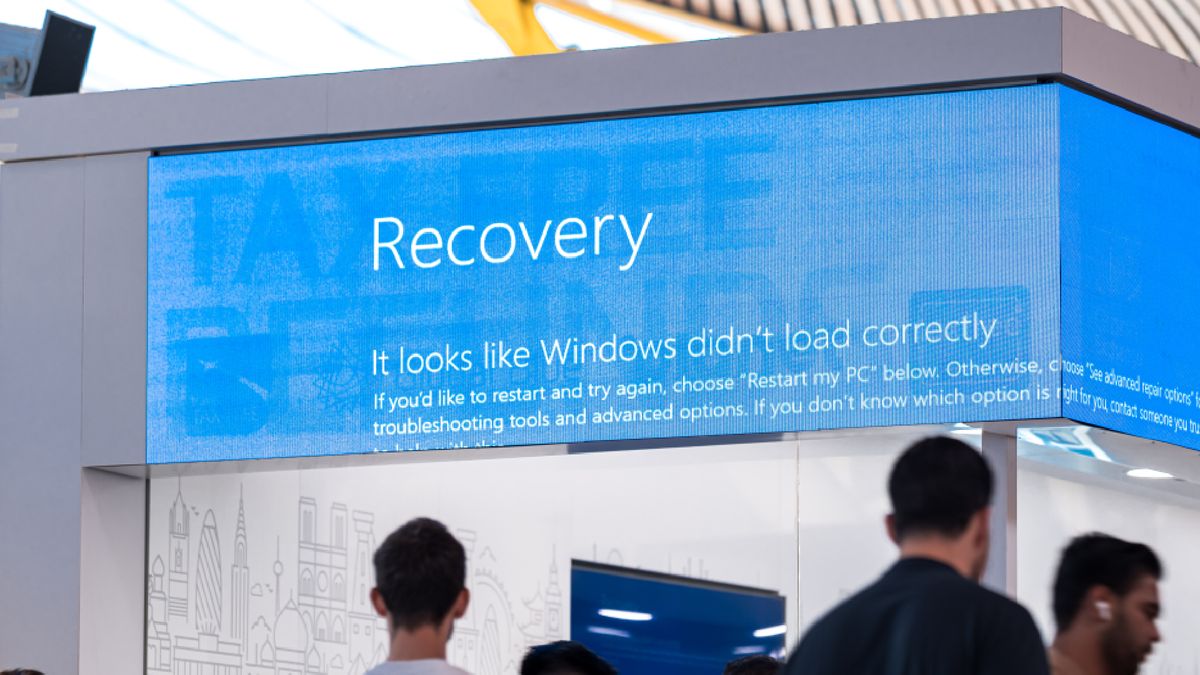

And for those who haven't seen my other posts on the subject, the reason this was so bad is that it left servers stuck at an OS recovery screen because they couldn't boot normally. They could still be started in Safe Mode or Safe Mode with Networking, but you either had to go physically to servers that had a console, KVM, or crash cart, or you had to connect to iLO/iDRAC or whatever through a browser (after fixing any Citrix environments or remote desktop servers) and then deal with a very slow, unresponsive system.

You had to fix servers that may have held your bookmarks for your iLO/iDRAC addresses, or vCenter addresses for any other tools you needed. In larger corporations and secure environments, you don't simply have an administrator-level account that you use to log into servers. You typically have to use a check-in/check-out system for privileged accounts, and those systems weren't working until you fixed them first. If you had issues with your domain controllers, you couldn't just use Safe Mode with Networking to log in and delete the offending CrowdStrike file. You had to use a local administrator account, which you wouldn't have had the password for.
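The fix itself was small once you could actually get a shell: CrowdStrike's published workaround was to boot into Safe Mode or the Windows Recovery Environment, go to the CrowdStrike drivers folder, and delete the file matching `C-00000291*.sys`. In practice that was done with a plain `del` at the command prompt; the sketch below only illustrates the matching logic in Python, with a dry-run switch. The default path assumes a standard `C:\Windows` install, and `remove_channel_291` is a hypothetical helper name.

```python
from pathlib import Path
from typing import List

# Assumes a default Windows install; adjust if Windows lives elsewhere.
DEFAULT_DIR = Path(r"C:\Windows\System32\drivers\CrowdStrike")


def remove_channel_291(directory: Path = DEFAULT_DIR, dry_run: bool = True) -> List[Path]:
    """Find (and, unless dry_run is set, delete) files matching C-00000291*.sys."""
    matches = sorted(directory.glob("C-00000291*.sys"))
    if not dry_run:
        for path in matches:
            path.unlink()
    return matches
```

Running it once with `dry_run=True` shows what would be removed before anything is deleted. None of this helps, of course, until you have gotten past the access problems described above.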

I could go on and on about this, but my point is that none of a company's redundancies mattered in this case, and the more secure your environment was, the harder it was to fix. Small businesses tend to trust their IT workers more and give them more access. They tend to have easy access to privileged user accounts and less complicated infrastructure. In a bigger shop, if you didn't know the address of your vCenter by heart, you may not have had a bookmark to reach it.

Smaller IT shops also don't use things like virtual desktops on remote servers, or layered access where you have to connect to one server to reach the rest of the environment. Secure environments rarely let you go straight from your desktop or laptop to the servers; you usually have a "jump server" of some sort to get to them. All of this added complexity had to be overcome, and these systems fixed, before you could start fixing customer-facing systems and services in earnest.

In nearly 30 years of IT work, this is the biggest clusterfuck I've ever seen, bar none. Mark my words: some sort of regulation or law will come out of this to try to prevent a company from running its products this way. The problem is that if that happens, we will probably have less protection against attack vectors.

That said, I think this was an eye-opener for some IT organizations as well. They will probably have to rethink how they handle disaster recovery and redundant datacenters to some extent. And depending on other factors, such as how their redundant datacenters were set up, some organizations suffered much more than others.

For people outside certain industries and outside the IT industry itself, the media coverage has downplayed how bad this really was. The fact that this happened on a Friday, giving organizations a weekend to restore services, lessened the impact the public perceived. Again, it was truly a lot worse than most people know.