NVIDIA has released a set of benchmarks that provide an early idea of how its GeForce RTX 4080 graphics cards might differ from one another.

> If you want to stream, or if that enters into the value proposition for you, full AV1 encoders are nice on the Nvidia cards.

Yeah, but you can get one of those on an Arc for ... considerably less, and use that as a second card just for compression. If you trust Intel drivers to work, that is.

> If you want to stream, or if that enters into the value proposition for you, full AV1 encoders are nice on the Nvidia cards.

Might be cheaper to get an AMD AM5 CPU... I'd say wait for 13th-gen, but that isn't going to get new IGP tech. Maybe Intel will have it for 14th-gen.

> Yeah, but you can get one of those on an Arc for ... considerably less, and use that as a second card just for compression. If you trust Intel drivers to work, that is.

Pretty much coming to this conclusion as well. I do think that Intel's transcoding drivers work, at least if they work as well as they do on the IGPs.

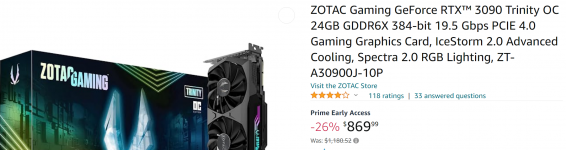

> Ok so the graph is ... interesting.
>
> I'm going to go out on a limb and say the lesser 4080 roughly matches the current 3090 (non-Ti).
>
> I can find a 3090 right now for less than the $899 MSRP of the 4080 Lesser Edition, and I can get that right now. Sure, you don't get DLSS 3 (or will you?), and I bet the Ada will probably still raytrace a bit better, but you do get double the VRAM...
>
> Trying to decide how I feel about that. On one hand, it's nice that the performance has jumped as it has. On the other... the prices jumped right along with it... so I don't feel like we really gained anything on the price/performance curve on this generation - just performance.
>
> And sure, that doesn't sound like such a bad tradeoff, especially if you agree that Moore's Law is dead and we should be seeing the prices go up; that price vs performance is a meaningless metric. But I would say - what about the mid- and low-tier cards that just didn't exist last generation? If the new 4070 starts out at $899, and that used to be a $350 tier relative to performance in a given generation, have we just priced out a large part of our already niche marketplace?

I was actually looking into buying a 3090 just now, but decided I don't really need it. For $870 I'd probably change my mind, but it still costs significantly more here in Europe.

> Ok so the graph is ... interesting. [...]
>
> I can find a 3090 right now for less than the $899 MSRP of the 4080 Lesser Edition, and I can get that right now. Sure, you don't get DLSS 3 (or will you?), and I bet the Ada will probably still raytrace a bit better, but you do get double the VRAM...

Yep, I posted about this one in another thread on the first day of Prime Early Access. Yesterday I even saw a Zotac Amp Extreme 3090 Ti for $999.

> The lesser model really should have been a 4070.
>
> It's moronic of them to muddy the waters with these stupid confusing model names.

But then they'd be selling an x070-series card for US$899, so...

> The lesser model really should have been a 4070.
>
> It's moronic of them to muddy the waters with these stupid confusing model names.

I disagree, the lesser model is a 4060 Ti and the top one is a 4070 in disguise.

> But then they'd be selling an x070-series card for US$899, so...

Another part of that is:

> They are going to rename the 12GB variant: https://www.nvidia.com/en-us/geforce/news/12gb-4080-unlaunch/

You beat me to it by seconds!

> You beat me to it by seconds!
>
> "The RTX 4080 12GB is a fantastic graphics card, but it’s not named right. Having two GPUs with the 4080 designation is confusing."
>
> All I can think to say about the news from NVIDIA is "priceless".

Better they do it than the entire community ridicule them for it.

> Better they do it than the entire community ridicule them for it.

The entire community was already ridiculing them for it, which I assume played a role in their decision to "unlaunch" the card.

> I disagree, the lesser model is a 4060 Ti and the top one is a 4070 in disguise.

Seems they left a lot of room for better 4080s, so as to be able to react to RDNA3 or something.