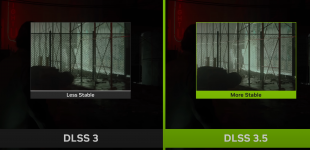

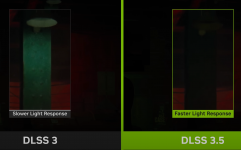

NVIDIA announced DLSS 3.5 at the Gamescom 2023 opening night, and it is said to arrive this coming fall. The new iteration appears to focus on a feature called Ray Reconstruction (RR), which is meant to provide significantly improved visuals when ray tracing effects are in use. RR is a type of denoiser and is said to have been trained on five times more data than DLSS 3. According to VideoCardz, denoisers often create graphical anomalies due to a lack of data, and they also strip away data that is needed for upscaling while reducing color data.

See full article...
