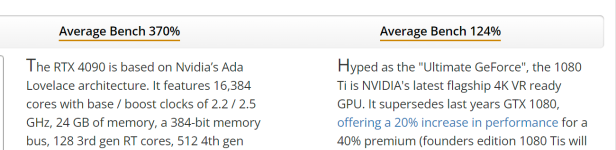

The GeForce RTX 4090, NVIDIA's flagship gaming GPU, is worth no more than $700, according to screenshots shared online by Micro Center customers. The screenshots show the retailer offering as little as $699.95 for custom versions of the GPU through its trade-in program, which converts old hardware into instant in-store credit.