Grimlakin

Gemini:

Faraday

You have to remember that when you're using the AI integration in Google, it isn't building a conversation but giving you a one-off answer. When I do it in Faraday or Gemini, it's part of a conversation, so it remembers its previous answers (up to a limit) and tries to vary its responses. Note how Gemini tried to include emotional context for its picks.
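If it helps to picture the difference, here's a rough Python sketch. None of this is how Google, Gemini, or Faraday actually work under the hood: `fake_model`, `one_off`, and `Conversation` are made-up names, and the "model" is just a toy that avoids repeating any answer it can still see in the history.

```python
from collections import deque


def fake_model(messages):
    """Hypothetical stand-in for a chat model: returns the first option
    that does not already appear as an assistant reply in `messages`."""
    options = ["red", "blue", "green"]
    already_said = {m["content"] for m in messages if m["role"] == "assistant"}
    for option in options:
        if option not in already_said:
            return option
    return options[0]  # everything was used, so start over


def one_off(prompt):
    # No history is sent, so every call looks identical to the model.
    return fake_model([{"role": "user", "content": prompt}])


class Conversation:
    def __init__(self, max_messages=6):
        # The "up to a limit" part: the oldest messages fall off
        # once the history holds max_messages entries.
        self.history = deque(maxlen=max_messages)

    def ask(self, prompt):
        self.history.append({"role": "user", "content": prompt})
        reply = fake_model(list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

Calling `one_off("pick a color")` over and over gives the same pick every time, because the toy model never sees what it said before. A `Conversation` gives a different pick on each `ask` until the history limit pushes the earlier answers out, at which point it starts repeating itself.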

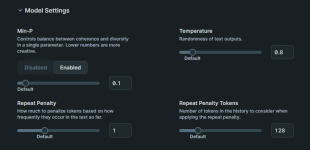
