Zarathustra


So,

I've been low-key researching and mulling this over for years now, and I finally pulled the trigger a couple of weeks ago.

Wound up getting:

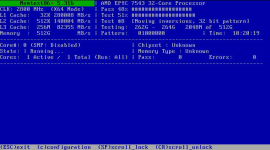

- EPYC 7543 (Milan, 32C/64T, 2.8 GHz base, 3.7 GHz boost), used, from tugm4470 on eBay (this seller is highly regarded on ServeTheHome)

- Supermicro H12SSL-NT-O - new on Amazon

- 8x Hynix 64 GB registered DDR4-3200, used, from atechcomponents on eBay, which seems to be a well-rated seller

- Supermicro SNK-P0064AP4 (92 mm Socket SP3 CPU cooler for 4U cases), new on Amazon

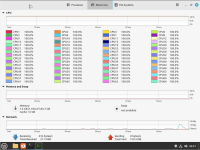
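For reference, here is a quick back-of-the-envelope sketch of what that memory config adds up to. It assumes one DIMM in each of Milan's eight DDR4 channels and a 64-bit (8-byte) data bus per channel; the function names are just hypothetical helpers, and the bandwidth figure is a theoretical peak, not a measured number.

```python
# Hypothetical sketch: total capacity and theoretical peak bandwidth for
# 8x 64 GB DDR4-3200. Assumes one DIMM per channel across EPYC Milan's
# 8 DDR4 channels, each with a 64-bit data bus (ECC bits not counted).

DIMM_COUNT = 8             # from the parts list
DIMM_SIZE_GB = 64          # from the parts list
TRANSFER_RATE_MT_S = 3200  # DDR4-3200 = 3200 mega-transfers per second
BUS_WIDTH_BYTES = 8        # 64-bit data bus per channel
CHANNELS = 8               # assumption: all 8 channels populated


def total_capacity_gb(count: int, size_gb: int) -> int:
    """Total installed memory in GB."""
    return count * size_gb


def peak_bandwidth_gb_s(channels: int, mt_s: int, width_bytes: int) -> float:
    """Theoretical peak in decimal GB/s: MT/s * bytes per transfer gives MB/s."""
    return channels * mt_s * width_bytes / 1000


if __name__ == "__main__":
    print(total_capacity_gb(DIMM_COUNT, DIMM_SIZE_GB), "GB")  # 512 GB
    print(peak_bandwidth_gb_s(CHANNELS, TRANSFER_RATE_MT_S, BUS_WIDTH_BYTES), "GB/s")  # 204.8 GB/s
```

Real-world throughput will land below that peak, but it shows why filling all eight channels matters on this platform.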

I'm going to be doing some bench-top testing over the next few weeks before I take down the old server and go for the upgrade.

Did some basic assembly last night:

No matter how many times I do this on a Threadripper (and now EPYC), this is the part that always scares the **** out of me:

4,094 hair-fine pins that, unlike some others, are irreparable if bent.

With my luck, I'd drop the CPU on them, which is probably why they include that nifty secondary plastic cover.

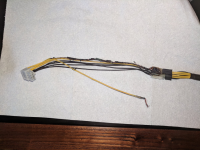

And a few minutes later. I wanted to do some bench-top testing last night, but apparently I no longer have a good PSU in my spare parts bin. I'll have to yank the one out of my test bench machine, but I just didn't feel like doing it last night.

I'm a little concerned about the clearance behind PCIe slot 3 with those weird Supermicro plastic M.2 clips sticking up, but I'm hoping it will be fine. I'm also considering putting a couple of slim heatsinks on those M.2 drives to keep them from getting too toasty; the Amazon store page says the heatsinks only stick up by ~3 mm, so hopefully I'll be OK. Those are going to be my mirrored boot drives (using ZFS). I'm going to be using at least five, maybe six, of the PCIe slots, so none of them can be blocked. The exact number depends on whether I can get the funky SlimSAS ports to run my Optane U.2 drives; if I can, I won't need my U.2 riser card. Three of the slots will get x16 cards and the rest x8 cards.
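A rough sketch of the PCIe lane budget for that slot plan: three x16 cards, x8 cards in the remaining slots, plus the two mirrored M.2 boot drives. The x4 width per M.2 drive is an assumption (typical for NVMe M.2), the Optane U.2 drives are left out since their hookup is still undecided, and `lanes_needed` is a hypothetical helper. The 128-lane figure is what a single-socket EPYC 7003 exposes in total; how the board actually routes lanes to slots and onboard devices is a separate question.

```python
# Hypothetical sketch of the PCIe lane budget for the planned slot layout.
# Assumptions: 3 x16 cards, x8 cards in the remaining slots, 2 M.2 boot
# drives at x4 each. Optane U.2 drives are not counted here.

CPU_LANES = 128  # PCIe 4.0 lanes on a single-socket EPYC 7003 (Milan)


def lanes_needed(total_slots: int, x16_cards: int = 3,
                 boot_m2_drives: int = 2, m2_width: int = 4) -> int:
    """Sum the lane widths of all planned cards and boot drives."""
    if total_slots < x16_cards:
        raise ValueError("fewer slots in use than x16 cards")
    x8_cards = total_slots - x16_cards
    return x16_cards * 16 + x8_cards * 8 + boot_m2_drives * m2_width


if __name__ == "__main__":
    for slots in (5, 6):
        used = lanes_needed(slots)
        print(f"{slots} slots in use: {used} of {CPU_LANES} lanes")
```

Either way (72 lanes with five slots, 80 with six), the plan stays comfortably under the CPU's total, so the real constraint is physical clearance rather than lanes.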

It's a little weird to see such a petite CPU cooler on a 225 W TDP CPU, but we're not overclocking here. As long as it stays under Tjmax at full load I'll be happy, and even better if I can get the full advertised boost out of it.

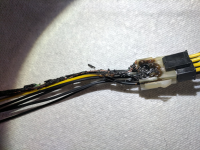