
The world's fastest gaming CPU, the AMD Ryzen 7 9800X3D, might be at the root of some unusual benchmark metrics for the NVIDIA RTX 5090.


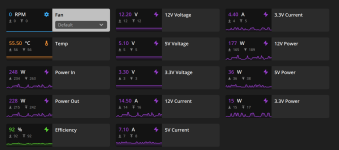

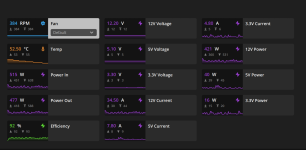

> How do you check your watts?

Mostly just with MSI Afterburner, but occasionally I'll check what the UPS reports as actual draw from the wall. It's rare for my 4090 rig to pull more than 600 W, but I think the most I've seen is around 750 W in real-world gaming, not just benchmarking to max something out.
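The two readings measure different things. A software monitor such as MSI Afterburner reports sensor data (mostly GPU-side). A UPS reports the AC load of everything plugged into it, before PSU conversion losses. The sketch below shows how a wall reading maps to the DC power the components actually receive. It assumes a single flat PSU efficiency; the 90% figure is a hypothetical placeholder, since real efficiency varies with load and model. The function names are illustrative only.

```python
# Sketch: relate a UPS (AC wall) reading to the DC load it implies.
# ASSUMPTION: one flat efficiency value; the 0.90 used below is a
# hypothetical placeholder, not a spec for any particular PSU.

def dc_load_from_wall(wall_watts: float, psu_efficiency: float) -> float:
    """Estimate DC power delivered to components from AC wall draw."""
    if not 0.0 < psu_efficiency <= 1.0:
        raise ValueError("psu_efficiency must be in (0, 1]")
    return wall_watts * psu_efficiency


def psu_loss(wall_watts: float, psu_efficiency: float) -> float:
    """Power dissipated in the PSU itself (wall draw minus DC output)."""
    return wall_watts - dc_load_from_wall(wall_watts, psu_efficiency)


if __name__ == "__main__":
    wall = 750.0  # peak wall draw mentioned in the post
    eff = 0.90    # hypothetical PSU efficiency at this load
    print(f"DC load ~ {dc_load_from_wall(wall, eff):.0f} W, "
          f"PSU loss ~ {psu_loss(wall, eff):.0f} W")
```

With those assumptions, a 750 W wall reading corresponds to roughly 675 W reaching the components.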

> I asked Copilot. Not sure it got the idle and light-to-medium power usage right, though. Anyone have a better model? Or perhaps a better question (that makes me think of The Hitchhiker's Guide to the Galaxy).

Pretty interesting results. I'd say it's not bad as a simple differential between two cards, but it assumes full TDP for "light to medium use" on your cards. Not sure what the idle draw on these cards is; that number is sometimes harder to find.
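A better model avoids assuming full TDP for every hour. Instead, it weights each card's draw by the time spent in each usage state (idle, light-to-medium, full load). The sketch below is one way to do that. Every wattage, hour count, and electricity price in it is a hypothetical placeholder, not a measured figure for any real card. `PowerProfile`, `daily_kwh`, and `yearly_cost_delta` are illustrative names.

```python
# Sketch: yearly energy/cost difference between two GPUs using a
# per-state duty-cycle model instead of assuming full TDP all the time.
# ASSUMPTION: all wattages, hours, and prices are hypothetical placeholders.
from dataclasses import dataclass


@dataclass
class PowerProfile:
    idle_w: float   # draw at an idle desktop
    light_w: float  # draw under light-to-medium use
    load_w: float   # draw under full gaming load (near TDP)


def daily_kwh(p: PowerProfile, idle_h: float, light_h: float, load_h: float) -> float:
    """Energy per day in kWh: sum of watts x hours for each usage state."""
    watt_hours = p.idle_w * idle_h + p.light_w * light_h + p.load_w * load_h
    return watt_hours / 1000.0


def yearly_cost_delta(a: PowerProfile, b: PowerProfile,
                      hours: tuple[float, float, float],
                      price_per_kwh: float, days: int = 365) -> float:
    """Extra yearly cost of card `a` relative to card `b` for the same usage."""
    delta_kwh = daily_kwh(a, *hours) - daily_kwh(b, *hours)
    return delta_kwh * days * price_per_kwh


if __name__ == "__main__":
    card_a = PowerProfile(idle_w=30, light_w=120, load_w=500)  # hypothetical
    card_b = PowerProfile(idle_w=20, light_w=90, load_w=400)   # hypothetical
    price = 0.15  # hypothetical price per kWh
    mixed = yearly_cost_delta(card_a, card_b, (4, 3, 2), price)
    full = yearly_cost_delta(card_a, card_b, (0, 0, 9), price)  # "full TDP" view
    print(f"duty-cycle model: {mixed:.2f}/yr, full-TDP model: {full:.2f}/yr")
```

With these placeholder numbers, the full-TDP assumption overstates the yearly difference by more than 2.5x compared with the duty-cycle model, which is why the idle and light-use figures matter.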

Per TechSpot:

RE: Marvel’s Spider-Man Remastered: “At 4K, the CPU bottleneck is mostly removed, but the RTX 5090 still appears slightly limited, averaging 212 fps. As a result, the RTX 5090 is just 26% faster than the RTX 4090.”
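For reference, here is the arithmetic behind a "26% faster" claim, along with the RTX 4090 average it implies. The 212 fps and 26% come from the TechSpot quote. The 4090 figure is derived, not measured, and only approximate because the 26% is itself rounded. The range below assumes rounding to the nearest whole percent. The function names are illustrative.

```python
# Sketch: relative-uplift arithmetic and the baseline fps it implies.
# The 4090 value computed here is DERIVED from the quoted 212 fps and 26%,
# not a benchmark result; the range assumes the 26% was rounded to nearest.

def uplift_pct(fps_new: float, fps_old: float) -> float:
    """Percent by which fps_new exceeds fps_old."""
    return (fps_new / fps_old - 1.0) * 100.0


def implied_baseline(fps_new: float, uplift_percent: float) -> float:
    """Baseline fps implied by fps_new and a stated percent uplift."""
    return fps_new / (1.0 + uplift_percent / 100.0)


def implied_baseline_range(fps_new: float, rounded_pct: float) -> tuple[float, float]:
    """Baseline range if the stated percent was rounded to the nearest integer."""
    lo = implied_baseline(fps_new, rounded_pct + 0.5)  # larger uplift -> lower baseline
    hi = implied_baseline(fps_new, rounded_pct - 0.5)
    return lo, hi


if __name__ == "__main__":
    est = implied_baseline(212.0, 26.0)
    lo, hi = implied_baseline_range(212.0, 26.0)
    print(f"implied RTX 4090 avg ~ {est:.1f} fps (range {lo:.1f}-{hi:.1f})")
```

This puts the implied RTX 4090 average at roughly 168 fps, somewhere between about 167.6 and 168.9 fps.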

> Pretty interesting results. I'd say it's not bad as a simple differential between two cards, but it assumes full TDP for "light to medium use" on your cards. Not sure what the idle draw on these cards is; that number is sometimes harder to find.

j2c and hardware nexus use dedicated devices to measure power draw and have posted numbers.

> Still seems off that LTT did their initial review with 7800X3Ds.

They used those because they have a dozen of them, sourced to perform similarly, which allows parallel testing.

> Small correction:
>
> TechSpot/HUB tested Spider-Man, not Spider-Man 2.

Fixed, but coincidentally the second game has also been reported as having a CPU bottleneck at 4K.
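A toy model shows why a CPU bottleneck shrinks the measured gap between two GPUs. If each frame waits on whichever of the CPU or GPU is slower, the delivered frame rate is roughly the minimum of the CPU-limited and GPU-limited rates. This is a simplification that ignores frame pacing, queueing, and per-scene variation. The fps values below are hypothetical, not benchmark results, and the function names are illustrative.

```python
# Toy CPU-bottleneck model: delivered fps ~ min(CPU-limited, GPU-limited).
# ASSUMPTION: ignores frame pacing, queueing, and scene-to-scene variation;
# all fps values are hypothetical, not measurements.

def delivered_fps(cpu_limited_fps: float, gpu_limited_fps: float) -> float:
    """Frame rate when each frame waits on the slower of CPU and GPU."""
    return min(cpu_limited_fps, gpu_limited_fps)


def observed_uplift_pct(cpu_fps: float, gpu_old: float, gpu_new: float) -> float:
    """GPU-to-GPU uplift you would measure when both run on the same CPU."""
    old = delivered_fps(cpu_fps, gpu_old)
    new = delivered_fps(cpu_fps, gpu_new)
    return (new / old - 1.0) * 100.0


if __name__ == "__main__":
    gpu_old, gpu_new = 150.0, 200.0  # hypothetical GPU-limited rates (+33%)
    for cpu_fps in (300.0, 180.0, 140.0):
        pct = observed_uplift_pct(cpu_fps, gpu_old, gpu_new)
        print(f"CPU cap {cpu_fps:.0f} fps -> measured uplift {pct:.1f}%")
```

In this model, the same +33% GPU difference measures as about 33%, 20%, or 0% depending only on the CPU cap, which is the kind of effect the thread is attributing to the test CPU.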

> Fixed, but coincidentally the second game has also been reported as having a CPU bottleneck at 4K.

Is it PhysX causing that?

> Is it PhysX causing that?

Funny you should mention that. I was doing some DLSS 4 transformer-model ray reconstruction testing this weekend with Cyberpunk 2077 and Hogwarts Legacy and noticed NVIDIA PhysX listed in the copyright notices before one of those games. It was a blink-and-you'll-miss-it moment, but I was a little surprised.