Yeah, the QDs are nice if you swap components often. Mine is hardlined. I got lucky going from a 1080 Ti with an EK block to a 2080 Ti with an Alphacool block and not having to redo any lines.

Tell me about it. That's why I invested in several Koolance QDCs. This is actually the first time since installing them that I am replacing a component. I'm excited!


XFX and EKWB Launch Speedster Zero Radeon RX 6900 XT, Says It Can Reach 3 GHz When Overclocked

- Thread starter Peter_Brosdahl


Zarathustra

Cloudless

- Joined

- Jun 19, 2019

- Messages

- 4,390

- Reaction score

- 4,732

Then I have to make a Microcenter run.

Apparently I don't have enough fittings in my spare parts bin to hook this up to my spare XSPC pump/res for cleaning without disassembling the entire computer...

Huh...

Turns out Microcenter doesn't have compression fittings for 3/8" ID / 1/2" OD tubing anymore.

They have 3/8" ID / 5/8" OD and 1/2" ID / 3/4" OD, but not what I needed.

I was looking at some that said they were 10mm ID / 12mm OD, but it turns out they were hard-tube parts with no inner barb :/
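For anyone else hunting for metric substitutes, a quick unit conversion of the sizes above (25.4 mm per inch) shows that even setting the missing barb aside, a 12 mm OD part is about 0.7 mm smaller than 1/2" OD tubing, while the 10 mm ID is close to 3/8". This is just a sketch; the 0.5 mm "close enough" tolerance is an arbitrary assumption for illustration, not a fitting manufacturer's spec.

```python
# Convert imperial soft-tubing sizes to millimetres and compare with a metric part.
MM_PER_INCH = 25.4


def inch_to_mm(inches: float) -> float:
    return inches * MM_PER_INCH


def roughly_matches(a_mm: float, b_mm: float, tol_mm: float = 0.5) -> bool:
    # tol_mm is an arbitrary illustrative tolerance, not a manufacturer spec
    return abs(a_mm - b_mm) <= tol_mm


tube_id = inch_to_mm(3 / 8)  # roughly 9.525 mm
tube_od = inch_to_mm(1 / 2)  # roughly 12.7 mm

metric_id, metric_od = 10.0, 12.0  # the "10mm ID / 12mm OD" parts

print(f'3/8" ID = {tube_id:.2f} mm, 1/2" OD = {tube_od:.2f} mm')
print("ID close:", roughly_matches(tube_id, metric_id))
print("OD close:", roughly_matches(tube_od, metric_od))
```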

I was planning on keeping what I bought as spares for the future, but instead I just got 4x barb tubes for the cleaning.

Zarathustra


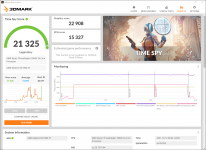

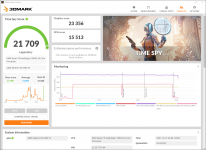

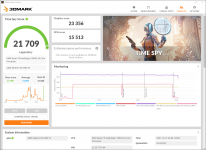

So, out of the box, having done only the following:

1.) Updated BIOS to allow for Resizable BAR/SAM

2.) Disabled SMT (because apparently 3DMark hates SMT on the Threadripper)

I scored the following:

Here I am going to note that a 22k graphics score in Time Spy is faster than anyone has posted in the 6800/6900 overclocking thread over at the [H], and this is at stock settings. I have changed nothing. All clock speed, voltage, and power settings are at their out-of-the-box defaults.

I am also going to note that I am not a fan of canned benchmarks. I don't play 3DMark, so it is not reflective of what I will get in game. What it is, though, is a good reference point for knowing whether everything is working right, as there is a literal ton of Time Spy data out there to compare against.

Either I have done something wrong in my testing, or I have the best 6900 ever in my possession.

Also worth noting: it does run a little hot. Not "air cooler" hot, but much hotter than my old Pascal Titan X under water. For these runs I set the fan profiles to keep the coolant at ~33C. On my Pascal Titan X this resulted in in-game temps of about 38C core. This beast is hovering at about 50-51C. Part of this is not unexpected. The Pascal Titan was a 250W TDP card. The 6900 XT stock is 300W, but who knows how high XFX set it at stock? The specs do not say. I'll have to upgrade the monitoring software and measure during a run to see what it registers.
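As a rough sanity check on whether the higher power alone explains the temperatures, you can divide the core-over-coolant temperature rise by the power. This is a crude lumped model under loud assumptions: it treats the card's nominal rating (250W / 300W) as the power actually flowing through the die, which it isn't, it assumes the two cards' core sensors are comparable, and 50.5C is just the midpoint of the 50-51C reading.

```python
def c_per_watt(core_c: float, coolant_c: float, power_w: float) -> float:
    """Core temperature rise over coolant per watt (crude lumped estimate)."""
    return (core_c - coolant_c) / power_w


COOLANT_C = 33.0  # coolant target from the fan profile

titan_x = c_per_watt(38.0, COOLANT_C, 250.0)  # Pascal Titan X, 250 W TDP
rx_6900 = c_per_watt(50.5, COOLANT_C, 300.0)  # 6900 XT at its nominal 300 W

print(f"Titan X: {titan_x:.3f} C/W")
print(f"6900 XT: {rx_6900:.3f} C/W")
print(f"ratio:   {rx_6900 / titan_x:.1f}x")
```

Under these assumptions power only goes up by 20% (300/250), while the rise per watt comes out several times higher. If XFX set a higher limit than 300W, the ratio shrinks somewhat, but not by anywhere near enough to close the gap, which is at least consistent with questioning the mount. A different die and sensor placement could account for part of it too.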

I'm wondering if they did a ****ty mounting/pasting job at the XFX factory, and if I should take off the block, and give it some Kryonaut goodness. (Really not looking forward to that...) Appreciate thoughts.


Zarathustra


To follow up, I collected some additional metrics.

Based on the Radeon software's measured wattage during a Time Spy run, the out-of-the-box power limit seems to be set to 335W. That's the max power draw I saw throughout the benchmark run, and it was fairly consistent, rarely dipping below 330W.

I moved the power slider all the way to the right to add my 15% and re-ran the benchmark. As would be mathematically expected, this resulted in a max power draw of 385W. It was not as consistent, though; it bounced up and down more, which suggests that at the 335W limit it was hitting the limit a lot, while at 385W it only hits it some of the time.
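The slider math is simple: the +15% is applied to the base limit, so 335W becomes roughly 385W. A minimal sketch, assuming the 335W observed draw is the base limit (MorePowerTool later showed 332W, which lands on about 382W instead):

```python
def raised_limit(base_w: float, slider_pct: float) -> float:
    """Power limit after applying a percentage power-limit slider to a base limit."""
    return base_w * (1 + slider_pct / 100)


print(round(raised_limit(335, 15)))  # 385
```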

Increasing the power (but touching nothing else) yielded slightly higher numbers.

It also resulted in a 3C increase in core temps to a max of 54C.

I do want to continue tweaking, but I'm not 100% sure what my next best bet is. Maybe go for a higher core clock, while also trying to reduce voltage a little? And then getting MorePowerTool to override the power limit maybe? I'll have to think about it.

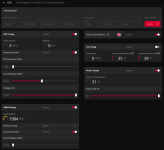

Oh, by the way, here are the default settings:


Zarathustra


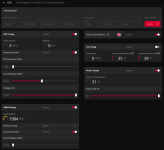

Did some more playing around with settings, but admittedly I don't really know what I'm doing. Here is the best I came up with:

- Min Freq: 2450 MHz
- Max Freq: 2650 MHz
- Voltage: 1130 mV (anything lower would crash the driver, 3DMark, or both)
- RAM: 2125 MHz
- Power Limit: +15% (which results in 385W)

Unlike other boards, the RAM slider on this one goes up to 3000. It doesn't help much, though. At 2200 I get stuttering, some mild artifacts, and a SIGNIFICANTLY reduced score. At 2175 the stutter and artifacts are gone, but the score is still very bad. 2150 scores very slightly below 2100. The sweet spot for me seems to be 2125 on the RAM.

Not sure if the score losses are due to the RAM not performing well because it is being pushed too hard, or if it is taking power away from the core due to the power limit.

Here is my best score thus far:

I'm obviously happy with this, but it would be fun to push the graphics score into the 23,000s.

That's all I have time for tonight. I'm considering playing around with MorePowerTool, but since I'm already at 385W, I'm not sure how much higher it is wise to go. I still have plenty of thermal headroom, though: core temp is 52C in my latest run, with junction temp at around 67C.

Again, I'd appreciate any suggestions.


Zarathustra


Alright, I think I've reached the limit for this one, at least without going to extreme measures.

Once I loaded up MorePowerTool, I found that the stock power limit was 332W (an odd choice). Since I had already tested +15%, that meant I had already tested up to ~382W, so I just set 382W as the new base power limit (giving me the option to go +15% over that in the drivers).
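Stacking the two changes compounds: the new 382W base is itself stock +15%, and the driver slider then adds another 15% on top, so the ceiling ends up a bit over 32% above stock rather than 30%. The sketch below only reproduces that arithmetic from the numbers in this post.

```python
STOCK_W = 332.0  # stock limit as shown in MorePowerTool
SLIDER = 1.15    # +15% driver power slider

first_step = STOCK_W * SLIDER    # about 381.8 W
new_base = round(first_step)     # 382 W, set as the new base limit
final_limit = new_base * SLIDER  # about 439.3 W with the slider maxed again

print(f"stock +15%:    {first_step:.1f} W")
print(f"new base +15%: {final_limit:.1f} W")
print(f"over stock:    {final_limit / STOCK_W - 1:.1%}")
```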

Best results I've been able to get thus far are at:

- MinFreq: 2575 MHz
- MaxFreq: 2725 MHz
- Volt: 1140 mV
- VRAM: 2126 MHz
- Power Limit: +15% (439W)


Not too shabby if I may say so myself.

I kept an eye on the power draw, and it actually did draw all the way up to 437W at one point! No wonder this room is getting warm.

I tried going up to a MaxFreq of 2750, but that resulted in crashes. I stepped the voltage all the way up to 1200mV, but that didn't help, and I didn't really want to go above that.

So I think I've had my fill of canned benchmarks for a while. Now I need to find myself a game to play.


Zarathustra


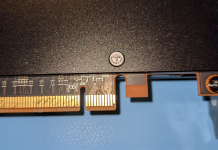

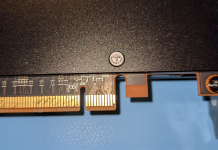

Alright, so I finally got around to taking the cooler off this thing. Anyone ready for some nudez?

I simply disconnected my QDCs and did this with fluid still in the block. A little unconventional, but I wasn't planning on opening the block itself, so I figured it was fine. It did make it a bit harder to get a flat surface to work on, but I made it work.

Also, I don't usually use antistatic mats and wristbands, but I've learned more about ESD over time, and my old argument of "well, in my 30 years of doing this I've never killed anything" doesn't really mean anything. ESD is not all or nothing. You can zap something just enough to weaken it when you touch it, and have it fail as a result years later. This is also my most expensive component to date, so I figured better safe than sorry.

So, first we have to take the massive backplate off. Seven screws is all it takes, one of them covered by one of those "Warranty void if removed" stickers.

Nice try XFX, we all know that is illegal by now.

Side note, no idea why the PCIe contacts are so scratched. I can't think of anything I might have done that would have done that. I'm guessing it must have happened at the plant?

Either way, they work just fine, so I am not concerned.

Seven screws removed and some gentle prying with my fingers later, the backplate is off:

Some pretty decent putty-style thermal pads cover all the hot components on the back. I'd call this an A+ on XFX's part. It doesn't really get much better than this from what I have seen.

Now we've revealed the ~16 (I think?) remaining screws that need to be removed to get the block off. In order to remember which screw holes were used by the backplate so I didn't accidentally populate them during reassembly, I highlighted them in the next pic.

Now, for the moment we've all been waiting for. The full frontal nudez.

My two comments are as follows:

1.) GPU looks well pasted. Maybe a little excess, but not too bad. I probably wasted my time in taking this off, but at least now I know. I've seen some horror stories, so I had to make sure, especially since it was running a bit warmer than I was used to with my old Pascal Titan X. Maybe that's just a 6900 XT thing, or especially THIS 6900 XT. It does pull a lot more power than my old Pascal Titan X did.

2.) Those VRMs DO NOT look like they are touching the thermal pads in the grooves on the block. There were no real indentations in the thermal putty/pads except on about two of them in the corner.

Alright, so I wiped down the GPU area of the block and the GPU itself with isopropyl alcohol, and reapplied Thermal Grizzly Kryonaut using the "rub it on using a nitrile glove" method. Thermal Grizzly Kryonaut is pretty thick when cold, so I heated the tube with a hair dryer before starting to make it easier.

I also rubbed some Thermal Grizzly Kryonaut on top of the VRMs to see if I could make them contact the thermal pads. A little unorthodox, I know, but I figured it was better than nothing. (I didn't have any good thermal pads to replace them with.)

The result?

Despite the paste job looking pretty good as it was, the GPU does run a lot cooler now.

At first I didn't think it was. On my first run the temps were as high as they were previously, but I guess the Kryonaut just needed to warm up and spread out a bit, because in the second run and beyond the temps settled down nicely.

Previously, at max overclock in Time Spy, I was hitting 56C core and 71C junction; now that is down to 46C core and 60C junction.

At stock settings it was hitting ~51-52C core (can't remember junction), so this is cooler than even the stock settings were.
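Putting the before/after numbers side by side: core and junction both dropped by 10-11C, and the junction-to-core gap barely moved (15C before, 14C after), which may suggest the whole die got cooler roughly uniformly. The core rise over coolant below assumes the coolant was held at the same ~33C target as the earlier runs; that wasn't stated for these runs, so treat it as an assumption.

```python
COOLANT_C = 33.0  # assumption: same coolant target as the earlier runs

before = {"core": 56.0, "junction": 71.0}  # max overclock, before the repaste
after = {"core": 46.0, "junction": 60.0}   # max overclock, after the repaste

for sensor in ("core", "junction"):
    drop = before[sensor] - after[sensor]
    print(f"{sensor}: -{drop:.0f} C")

gap_before = before["junction"] - before["core"]
gap_after = after["junction"] - after["core"]
print(f"junction-core gap: {gap_before:.0f} C -> {gap_after:.0f} C")

rise_before = before["core"] - COOLANT_C
rise_after = after["core"] - COOLANT_C
print(f"core rise over coolant: {rise_before:.0f} C -> {rise_after:.0f} C "
      f"({1 - rise_after / rise_before:.0%} lower)")
```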

I have had one weird side effect, though. I've had to back off about 50-75MHz on the main clocks to remain stable. I'm not quite sure why. Temps are obviously better, and I don't think I damaged anything, because if you damage these things they are either dead or not; you don't just get a slightly reduced max overclock.

My best guess is that AMD's automagic logic is trying to reduce the voltage due to the lower temps, but overdoing it, resulting in a lack of stability.

I am going to have to tinker with the clocks and voltages to see if I can get back up to where I was.

