I think the lack of APUs for gaming laptops has more to do, at least in the case of AMD, with their console contracts. It's only now (upcoming months actually) that RDNA2 APUs are being released. I do think there IS a market for gaming APUs that can rival consoles. And I see Intel going that route as they are not limited by any console contracts.

It's a fine theory, but realistically it's just as easily explained by there not being a large potential market. A big part of that is based on what you already see: APU performance that would approach dGPU performance would need dGPU levels of memory bandwidth. DDR5 gets a lot closer to that, but it's still a fraction of what a decent entry-level dGPU can bring. Thing is, APUs generally go into entry-level systems, meaning that for now it's a hard business case to make, most especially when AMD is already supply constrained at TSMC.
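To put rough numbers on the bandwidth point, here's a quick back-of-the-envelope sketch. It only computes theoretical peak bandwidth (transfer rate times bus width), and the three configurations are my own illustrative assumptions rather than any specific product: dual-channel DDR4-3200, dual-channel DDR5-4800, and a 128-bit GDDR6 setup at 14 GT/s of the kind found on entry-level to mid-range dGPUs. Real-world throughput will be lower, and an APU also has to share its bandwidth with the CPU.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s.

    transfer_rate_mts: memory transfer rate in megatransfers per second.
    bus_width_bits: total bus width in bits (all channels combined).
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Illustrative configurations (assumptions, not measurements of real products).
configs = {
    "dual-channel DDR4-3200 (128-bit)": (3200, 128),
    "dual-channel DDR5-4800 (128-bit)": (4800, 128),
    "128-bit GDDR6 at 14 GT/s": (14000, 128),
}

for name, (rate, width) in configs.items():
    print(f"{name}: {peak_bandwidth_gbs(rate, width):.1f} GB/s")
```

Under those assumptions, DDR5 lands at roughly a third of the GDDR6 figure, which is the "fraction" I'm talking about.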

Thing is, nobody "wants" a high powered APU. Especially at the prices Apple is charging.

Actually? People are loving Apple's APU, even at the prices they're charging. Thing is, Apple's APU has gobs of memory bandwidth to share between the CPU and GPU like AMD's console APUs do, but unlike any desktop-class APU. Apple's GPUs then are able to put out dGPU-class performance numbers, whereas any APU using a standard desktop memory controller is going to be severely bandwidth limited in comparison.

If they are targeting people doing very specific tasks and workloads, that is a very niche market of people that want/need the ability to upgrade their GPU as often as they want.

Well, they're targeting content creators, from the consumer to amateur to professional level. For that 'niche' market, Apple has tuned their entire hardware and software stack exceptionally well. And when you think about it, from a broad computer market perspective, that's the heaviest work that most people do. Apple is definitely on point when it comes to shipping high-performance systems.

At least that's what I've heard from a number of architectural, structural, electrical, civil, nuclear, etc. engineers that my wife works with. None of those guys are even remotely interested in Apple products.

I mean, sure? When you get that niche, you're likely looking at what is best suited to your very specific workload. I'd imagine Windows or Linux and leaning heavily on CUDA and OpenCL. Apple's walled garden with custom APIs is likely a pretty big turnoff, as would be the inability to upgrade GPUs / Compute Engines.

Then again, this is Apple, and there will be a lot of people who buy one just to brag about having one and not actually needing one.

I'm sure that's prevalent, but the thing is, Apple is making the best laptops one can buy, and some of the best computers one can buy, assuming that desktop gaming isn't a priority.

I say that as I've been contemplating both a 14" MBP, because it'd run circles around my 15" XPS while being lighter, quieter, and having over twice the battery life, and

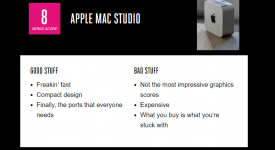

also contemplating the latest 'Mac Studio', but in a minimal configuration. Thinking about it now, I'd probably just go with a Macbook Pro, but having a 10Gbit interface on the Studio is tempting!