
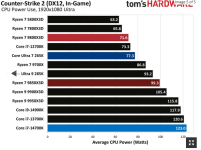

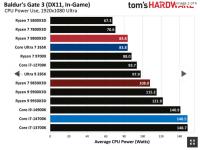

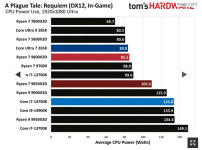

This is the only review showing lower power use than the 9800X3D; all other tests show the 9850X3D consuming more power.


As stated in the review:

"On this page, we are going to test the power draw of the CPUs, testing multi-core performance in Cinebench 2026 running for 15 minutes."

The method I used (and have always used) is a simple full-load Cinebench multi-core power test. I loop the Cinebench multi-core benchmark for at least 15 minutes with HWiNFO open on screen and monitor the reported "Package Power". I then graph the maximum (peak) value recorded.
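For anyone who wants to pull the peak number from a log instead of watching the screen, here is a minimal Python sketch. It assumes HWiNFO's CSV logging was enabled during the loop and that the package power column is named `CPU Package Power [W]`; that column name is an assumption (labels vary by CPU and HWiNFO version), so check the header of your own log. `summarize_package_power` is a hypothetical helper, not part of HWiNFO.

```python
import csv
import io
from statistics import mean

# Assumed column name; HWiNFO sensor labels differ between CPUs and versions.
POWER_COLUMN = "CPU Package Power [W]"


def summarize_package_power(csv_text, column=POWER_COLUMN):
    """Return (peak, average) package power in watts from CSV log text.

    Cells that are blank or not numeric (for example a repeated header row)
    are skipped rather than treated as zero.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    readings = []
    for row in reader:
        value = (row.get(column) or "").strip()
        try:
            readings.append(float(value))
        except ValueError:
            continue  # skip blank or non-numeric cells
    if not readings:
        raise ValueError(f"no numeric readings found in column {column!r}")
    return max(readings), mean(readings)


if __name__ == "__main__":
    # Tiny made-up sample for illustration only; not data from the review.
    sample = (
        "Time,CPU Package Power [W]\n"
        "12:00:00,140.2\n"
        "12:00:02,161.8\n"
        "12:00:04,158.0\n"
    )
    peak, avg = summarize_package_power(sample)
    print(f"peak {peak:.1f} W, average {avg:.1f} W")
```

Reporting the average next to the peak would show whether the package sits at its limit for the whole run or only spikes briefly; the review itself graphs only the peak.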

I do not claim that this is a thorough or detailed power test. It is meant to verify all-core power draw under full load and compare it against the TDP quoted or rated by AMD and Intel. I am aware that TDP is a thermal design power recommendation for cooling, not a power draw figure.

I do claim this is a valid measurement and a realistic scenario that an end user will encounter. For example, if you are editing and encoding or transcoding video, running 3D renders, or doing anything else that pushes all cores to the max, this is the power draw you would see.

This is different from testing power while gaming, and it does not show power while gaming. I have done gaming power tests in the past on certain CPUs, but this was not one of them. You will note that Tom's testing was done in games, which is a very different load from what I ran. I was using productivity-based testing.

In addition, I am aware there are more accurate ways to capture power usage, but our reviews don't focus on that aspect. This method shows the "cap" that the motherboard BIOS sets for package power: we can see that power goes above the TDP number AMD and Intel provide, and where it tops out. I encourage you to look at other reviews for more detailed power testing and analysis.
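To put a peak reading in context against the rated TDP and the package power cap, a simple comparison is enough. The sketch below is hypothetical and rests on one loudly stated assumption: AMD's commonly cited stock relationship for AM4/AM5 desktop parts, where the default Package Power Tracking (PPT) limit is about 1.35 × TDP (for example, 120 W TDP → about 162 W PPT). Motherboard BIOS defaults can override this, so pass your board's actual limit as `cap_w` when you know it. The numbers in the example are illustrative, not results from this review.

```python
# Assumption: AMD's commonly cited stock PPT-to-TDP ratio for AM4/AM5 desktop CPUs.
# Motherboard BIOS settings (vendor defaults, PBO, etc.) may apply a different cap.
AMD_STOCK_PPT_RATIO = 1.35


def power_vs_tdp(peak_w, tdp_w, cap_w=None):
    """Compare a measured peak package power against TDP and a power cap.

    If cap_w is None, the cap is estimated as TDP * AMD_STOCK_PPT_RATIO,
    rounded to the nearest watt. Returns the cap used, how far the peak
    sits above TDP (%), and what fraction of the cap the peak reached (%).
    """
    if tdp_w <= 0:
        raise ValueError("tdp_w must be positive")
    cap = cap_w if cap_w is not None else round(tdp_w * AMD_STOCK_PPT_RATIO)
    return {
        "cap_w": cap,
        "over_tdp_pct": (peak_w - tdp_w) / tdp_w * 100.0,
        "of_cap_pct": peak_w / cap * 100.0,
    }


if __name__ == "__main__":
    # Illustrative numbers only; not measurements from the review.
    print(power_vs_tdp(peak_w=160.0, tdp_w=120.0))
```

A peak close to 100% of the cap is what you would expect from a sustained all-core load like the one described here; a gaming load will typically land well below it.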

I hope that helps.