Image: NVIDIA

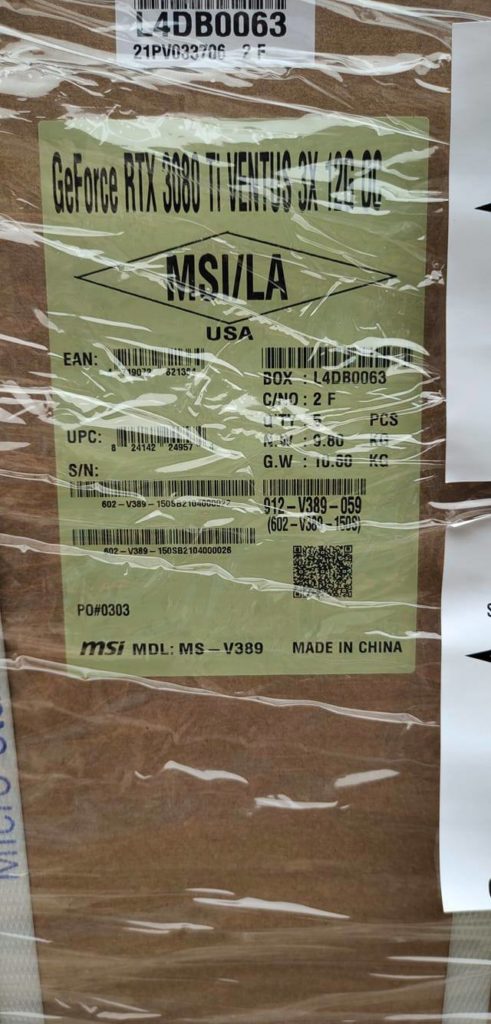

Contrary to earlier reports suggesting that NVIDIA’s GeForce RTX 3080 Ti graphics cards had only just entered mass production, a Facebook user has shared images of MSI shipments confirming that plenty of units of the highly anticipated Titanium variant have already been produced. Better still, the labels visible in the images confirm the memory configuration of the GeForce RTX 3080 Ti. Despite previous speculation about 20 GB or 16 GB of memory, NVIDIA’s new Ampere flagship GPU will feature just 12 GB of GDDR6X. That is 2 GB more than the standard GeForce RTX 3080, but well short of the 24 GB on the green team’s BFGPU, the GeForce RTX 3090.

NVIDIA GeForce RTX 3080 Ti is the upcoming enthusiast...
