
RX6000 preview

- Thread starter Stoly


Closer than I imagined, but not that close. I think the 3080 gets closer to 100FPS average at 4K in CoD:MW. However, it does look like it will compete nicely with the 3070 and it will certainly be faster than the RTX 2080 Ti.


Around 20% faster than the 2080 Ti, which would slot it right in between the 3070 and 3080. Given the past 1070 Ti and 2070 SUPER releases, I wonder if AMD is aiming at that potential product release from NVIDIA instead of going after the current $500 product. That would fit with the rumored $549-$599 price point.


I think that they are. If I were going to put a tinfoil hat on, I'd also suggest that this is why NVIDIA priced the 3080 10GB the way they did. They might have known roughly where Big Navi was going to fall and needed to price the 3080 aggressively. Sitting in between the 3070 and 3080, the Radeon 6000 series could certainly be priced higher than the 3070, but NVIDIA would have a hard time justifying a 3080 priced like the RTX 2080 Ti was.

That was like my best case scenario. I expected it to be closer to the 3070 than the 3080. Well, we'll see soon enough.

BTW, it seems like there's no DLSS equivalent? Or could AMD be saving it until the official release? Or maybe this IS the DLSS equivalent...

10% slower than the 3080 at $100 less is not gonna sell a ton of cards.

Some, for sure, but it's simply not gonna dent the market share.

AMD is really banging hard on the gaming CPU drum today, probably because the GPU is not going to upset anything.
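Whether "10% slower than the 3080 at $100 less" is a compelling deal comes down partly to performance per dollar. A minimal sketch of that arithmetic, assuming the RTX 3080 Founders Edition's $699 launch MSRP as the baseline and taking the speculative "10% slower" and "$100 less" figures from the post at face value; the function name `value_ratio` is purely illustrative.

```python
def value_ratio(rel_perf: float, price: float, base_price: float) -> float:
    """Performance per dollar of a card relative to a baseline card.

    rel_perf is performance as a fraction of the baseline (0.9 = 10% slower).
    A result above 1.0 means more performance per dollar than the baseline.
    """
    return (rel_perf / price) / (1.0 / base_price)

RTX_3080_MSRP = 699.0          # assumption: 3080 FE launch price
rx_price = RTX_3080_MSRP - 100  # "$100 less", per the post

ratio = value_ratio(0.9, rx_price, RTX_3080_MSRP)
print(f"Perf per dollar vs. 3080: {ratio:.3f}x")

# Price at which a 10%-slower card would only match the 3080's perf per dollar
break_even = 0.9 * RTX_3080_MSRP
print(f"Break-even price: ${break_even:.2f}")
```

Under these assumptions the hypothetical card comes out roughly 5% ahead on performance per dollar, and it would need to sell for about $629 or less just to break even with the 3080 on that metric.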


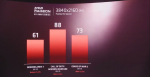

Gears 5 at 4K: 3080 (72) vs. AMD RX 6000 (73), both at Ultra settings. Which RX 6000 card was used is also unknown (Scott Herkelman stressed this for all the benchmarks), and of course this still isn't a head-to-head test on the same rig.

Most CoD MW benchmarks for the 3080 are around 80, with 85 being the best I've seen (at The Verge), so the RX 6000's 88 is not too bad for CoD MW.

In Borderlands 3 at 4K, TechPowerUp has the 3080 at 70 FPS vs. AMD's claimed 61 for the RX 6000. Sort of interesting, as I always thought this was a game that favored AMD.

Normally I don't bother looking at 4K results; I'm sure there are many other results out there.
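One rough way to read these numbers is to express each of AMD's claimed figures relative to the 3080 result quoted above, then take a geometric mean of the per-game ratios so no single title dominates. This is only a sketch: the FPS values are the ones quoted in this post (AMD's own slide vs. third-party 3080 reviews on different rigs), so the output is only as good as that apples-to-oranges comparison. The helper names are made up for illustration.

```python
from math import prod

# 4K FPS as quoted in the post: (RTX 3080 from third-party reviews,
# RX 6000 from AMD's slide). Different rigs, so treat results as rough.
RESULTS = {
    "Gears 5": (72, 73),
    "CoD MW": (85, 88),         # 85 = best 3080 figure seen (The Verge)
    "Borderlands 3": (70, 61),  # 70 = TechPowerUp's 3080 figure
}

def pct_delta(baseline: float, other: float) -> float:
    """Percent by which `other` is faster (+) or slower (-) than `baseline`."""
    return (other - baseline) / baseline * 100

def geomean_delta(pairs) -> float:
    """Geometric mean of per-game speed ratios, as a percent delta."""
    ratios = [other / base for base, other in pairs]
    return (prod(ratios) ** (1 / len(ratios)) - 1) * 100

for game, (rtx3080, rx6000) in RESULTS.items():
    print(f"{game}: {pct_delta(rtx3080, rx6000):+.1f}%")
print(f"Geometric mean: {geomean_delta(RESULTS.values()):+.1f}%")
```

With these inputs it prints +1.4% (Gears 5), +3.5% (CoD MW) and -12.9% (Borderlands 3), for an overall geometric mean of about -2.9%.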


Brian_B


10% slower, $100 less, and in stock will, though.

That remains to be seen.


Don't forget, the Radeon 6000 series should offer more VRAM than the 3080 does. If it's only 10% slower and the cards are readily available, I can see people going for them.


Honestly, if they can get them out in quantity, they have an attractive product, as long as it competes with the 3080 in some titles and NVIDIA keeps struggling to produce more inventory for the channel.

Only if the VRAM becomes a real advantage in proven tests/reviews.

That sweet 16GB the R7 (Radeon VII) had did jack-fuckin'-nothin' for sales in the long run... and I'm not talking about compute either; it WAS marketed as a gaming GPU.

I don't think capacity is going to be a problem, but bandwidth is. We saw Turing being bandwidth-starved, especially with ray tracing.

Ready4Droid


Um... when did the 3080 get 100 FPS at 4K? Oh, I see, you're comparing HIGH settings on NVIDIA to Ultra on AMD, lol. Check out the benchmarks: yes, NVIDIA is around 100 FPS when you run it at High settings, but it's under 90 FPS at Ultra, which puts it almost exactly the same. The setup could make some difference, so we'll have to wait for real benchmarks with direct comparisons. Your memory is correct that the 3080 does hit 100 FPS, but not at the settings tested, so the comparison isn't really accurate.

"At 4K we set the settings all to High." - Average frame rate = 101fps

https://wccftech.com/review/nvidia-geforce-rtx-3080-10-gb-ampere-graphics-card-review/13/

Average frame rate (Ultra) = 85.5fps

https://www.back2gaming.com/reviews...080-founders-edition-graphics-card-review/12/

So as you can see, depending on exact settings, it is much closer than what you are thinking.
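To show how much the choice of baseline swings the verdict, here is a small sketch comparing AMD's claimed 88 FPS (Ultra) CoD MW figure against each of the two 3080 results linked above. It assumes the two reviews' numbers can be compared directly, which they can't strictly (different rigs as well as different settings); the variable names are illustrative.

```python
# 4K CoD MW averages for the 3080 from the two reviews linked above;
# 88 FPS is the RX 6000 figure from AMD's own slide (Ultra).
RX6000_ULTRA = 88.0
RTX3080 = {"High (wccftech)": 101.0, "Ultra (back2gaming)": 85.5}

# Percent by which AMD's claim is ahead (+) or behind (-) each baseline
deltas = {label: (RX6000_ULTRA - fps) / fps * 100 for label, fps in RTX3080.items()}
for label, d in deltas.items():
    print(f"RX 6000 vs 3080 @ {label}: {d:+.1f}%")

# How much higher the 3080's High result is than its Ultra result
gap = (RTX3080["High (wccftech)"] / RTX3080["Ultra (back2gaming)"] - 1) * 100
print(f"3080 High vs Ultra gap: {gap:.1f}%")
```

Against the High result the RX 6000 looks about 12.9% behind; against the Ultra result it looks about 2.9% ahead, and the two 3080 figures themselves differ by roughly 18%.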


No, I'm not. I Googled CoD:MW RTX 3080 benchmarks and found a couple showing around 100 FPS in the game. I don't recall what the settings were for each one. The point is that without knowing the precise details of each system configuration, CoD benchmarks aren't comparable, as there isn't a built-in benchmark for the game. Having said that, I've done a lot of benchmarking (not of a 3080), and unless one level, map or area is particularly bad, you can potentially ballpark it and make a comparison.

That said, my thoughts have changed on the potential of the RX 6000. Basically, it's this: you don't put 16 or 20GB of RAM on a card that's only going to perform like a 3070 or slightly better than an RTX 2080 Ti. We have seen jack **** for benchmarks out of the RX 6000 series, but they could have a part that competes more with a 3080 or even a 3090 than we realize.

AMD has historically not put as much RAM on their cards as NVIDIA, because they've been stuck with higher-priced HBM memory. I don't know what they are doing here, but my guess is that these will be more comparable than initially thought. Even if they are equal or slightly better, there are still a couple of issues, primarily software. AMD needs something comparable to DLSS, ray tracing support and some other things. So while the performance may be on par (which remains to be seen), they'll have to do a lot to make them feature-comparable. So far, AMD has floundered on that front.

I've used FreeSync / G-Sync compatible monitors (I own a couple) and hardware based G-Sync. The latter is smoother and I can tell the difference. It would take a lot to make me go with an AMD solution. Let's hope they can pull it off and change my mind about that. Let's also hope that the pricing is comparable or significantly better than NVIDIA's.

Ready4Droid


The settings show High where I've seen 100 FPS, and the 85 FPS result I linked said Ultra. The screenshot from AMD has Ultra listed, so we know it was not High. But as we both mentioned, individual settings and setup may still vary, so we really won't know until direct benchmarks at identical settings are performed. I do know that comparing High settings to Ultra is not really fair.

Yeah, I feel pairing 3070-level performance with 16GB would be a waste of money when they could have put in less and competed well on price/performance. Putting in 16GB limits their ability to lower prices and still maintain margins, which they are trying to improve.

I have a suspicion we'll be hearing more about DirectML in the near future.

I think a lot of people will be surprised at how well AMD is able to compete; given how many times they've let us down in the past, it will be all the more shocking.

https://www.overclockersclub.com/reviews/nvidia_geforce_rtx3080_founders_edition/6.htm

That's what I remembered seeing. In any case, we'd need to see both a precise breakdown of the actual in game settings (just calling it max isn't enough) and we need to know what AMD used for their benchmark platform. Was it a 9900K system? Ryzen 9 3950X? Or was it the upcoming Ryzen 9 5950X or some other 5000 series CPU? What were the memory settings for said CPU? You see the problem?

The benchmark numbers AMD released are encouraging, but these companies tend to cherry-pick what they show the public in situations like this. AMD is no different.


At this stage I don't see much reason for hype.

AMD will more or less match raster perf to Nvidia, sell it for the same price or possibly 5% less, and have decent RT with some form of half-baked DLSS-type solution.

The main reason to buy a Radeon GPU will still remain the "I don't like Nvidia" thing. Pretty simple choice if the specs are somewhat close between the two.


Sorry to say it, but I think you're right. ATi, even after being acquired by AMD, tended to innovate more on the software side, which made their products compelling (in addition to better color compression for a good while). These days AMD seems to just be following NVIDIA in a "me too" fashion and not doing much better in implementation.

Ready4Droid

Sort-of-Regular

- Joined

- Jul 9, 2019

- Messages

- 132

- Reaction score

- 93

Yeah, I guess Ultra doesn't mean much in the scheme of things; it's just that most of the results I found near 100 FPS were not at max but at High. Who knows what AMD is calling Ultra or whatever; as mentioned, it makes it really hard to compare. What we can say is that AMD is anywhere from a few FPS faster to a good bit slower, lol. AMD's benchmarks were run on a 9900K, and I think they said 3600MHz memory, but I'm not too sure on that one. I do see the problem, and as I said, we really have to wait until comparable benchmarks come out. I just wanted to point out that the 3080 with most settings turned up is ~85 FPS, so a blanket statement that the 3080 runs at around 100 FPS really depends on the setup and which settings are on or off. I wasn't saying 85 FPS was the only valid comparison; my point was that the real figure could be anything in between, and we won't know until the cards come out and/or the embargo lifts.

Hype is relative; I just like the competition. Whether or not it is "worth" buying will differ per person and is highly dependent on $/perf, which we can still only speculate on. I hope AMD comes out with something in DirectML to compete with DLSS, and we'll see where RT performance falls. Most indications are it'll be ~2080 Ti level, so a bit slower than the 3080, but not useless either. Of course, this isn't verified in any way, shape or form. I typically don't buy top end, as I have too many PCs to keep up with to spend that much on a single one, so I am more interested in the mid-to-low-end competition. But I do like to keep up to date on the high-end stuff, and once in a while I'll buy if it feels like a really good deal. I'm not super hyped or anything, but I feel they will come a bit closer than most were giving them credit for not too long ago, and as I said, competition is good to have.