Hmm, do they?

TDP is listed as 450 W for the 3090 Ti, and the 4090 is listed at the same figure.

Now, there is a difference between published TDP and what you see in the real world, and of course overclocks throw all that out the window. But at least on paper they look about the same. I can't say more than that without having one of each to compare power draw side by side.

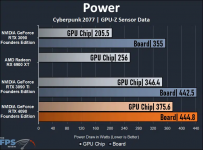

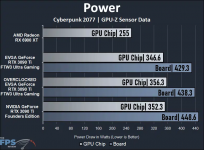

Here's as close as I can come, from your reviews. It looks like the 4090 is higher on just the chip, but only by a small margin (35 W or so). Total board power is almost identical, and that's what is going to matter for draw on the power supply. Straw met camel, or something else?

Also, shouldn't these cards be able to pull up to 75 W through the PCIe slot itself? CPU-Z probably can't tell where the power is coming from specifically, just the total power used.
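For what it's worth, here's the back-of-the-envelope math on the slot plus connectors, as a rough sketch. The limits are the commonly quoted spec ceilings (75 W for the slot, 150 W per 8-pin, 600 W for a 12VHPWR connector), and the function names are just mine for illustration. It only shows what the connectors are rated to deliver, not what either card actually pulls.

```python
# Rough power-budget arithmetic using commonly quoted spec ceilings.
# These are rated maximums, not measured draw from any particular card.
PCIE_SLOT_W = 75        # max a x16 PCIe slot is rated to deliver
EIGHT_PIN_W = 150       # rating per 8-pin PCIe power connector
TWELVE_VHPWR_W = 600    # max rating of a 12VHPWR (16-pin) connector


def available_power(eight_pins=0, twelve_vhpwr=0, use_slot=True):
    """Upper bound (in watts) on what slot plus connectors are rated for."""
    total = PCIE_SLOT_W if use_slot else 0
    total += eight_pins * EIGHT_PIN_W
    total += twelve_vhpwr * TWELVE_VHPWR_W
    return total


def headroom(tdp_w, **connectors):
    """Rated supply minus the card's listed TDP, in watts."""
    return available_power(**connectors) - tdp_w


if __name__ == "__main__":
    # A 450 W card fed by one 12VHPWR connector plus the slot
    print(available_power(twelve_vhpwr=1))
    print(headroom(450, twelve_vhpwr=1))
    # Same 450 W card fed by three 8-pin connectors plus the slot
    print(headroom(450, eight_pins=3))
```

So on paper a 450 W card has rated headroom either way, and the slot's 75 W is part of that budget.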

