Zarathustra

HEDT used to have really long legs. I had friends who built i7-920s and Sandy Bridge-Es and stretched them for 10 years (or more!). They could get away with that through strategic upgrades and the benefits of the platform itself. AM4 had a pretty good lifespan for the friends who built with it, but it didn't compare to those older HEDT platforms. Honestly, I'd love to be able to recommend a low-core-count Threadripper platform if it could get a few generations of CPU upgrades, with all that extra memory bandwidth and all those PCIe lanes, so it could be strategically upgraded for 10-12+ years.

I used my X79 Sandy Bridge-E 3930K for 8 years. It overclocked to a (then) ungodly 4.8 GHz, which made it untouchable at the time.

I still use it as a test bench machine.

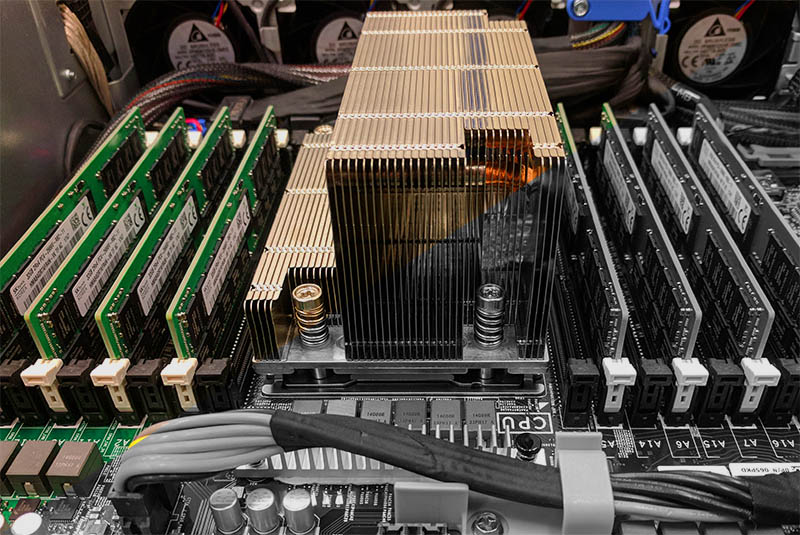

As far as Threadripper goes, I am still undecided. I still like the many PCIe lanes and the workstation-class motherboards without racing heatsinks, fancy paint jobs or RGB LEDs, but the cost is difficult to justify...

Especially when you can upgrade to the next-generation drop-in Zen chip every couple of years for only $350.

The value proposition just isn't there.