Zarathustra

Cloudless

- Joined: Jun 19, 2019
- Messages: 4,336
- Points: 113

Hey,

I figured I'd start my own thread rather than pull the 4070TI review even further off topic.

If anyone cares about my progress with this GPU, I'll post it here.

First, I'll quote the history from the other thread:

Alright, with that out of the way, I got a surprise knock at the door today. It was UPS.

As @Niner51 suggested might happen, it showed up at my door without any tracking history, and two days earlier than predicted at that, so of course I had to play with it a little.

I don't have my water block yet, so I am not sticking it in my main rig, but this is what I have a testbench machine for: to test new hardware offline before I take the server or desktop down for an upgrade. And I definitely want to make sure the GPU is working properly before I take the cooler off and install the water block when it arrives.

I just noticed I didn't take any pictures of the GPU out of the system. I will have to remedy that when the waterblock arrives.

Anyway, pictures do not do the size of these 4090s justice. This thing is HUGE.

The Phanteks Enthoo Pro my test bench machine resides in is a large case, and it still wasn't entirely straightforward to get it in.

I had to remove the drive bays, and slide the GPU in at an angle into the space they used to sit in, then press it into the slot.

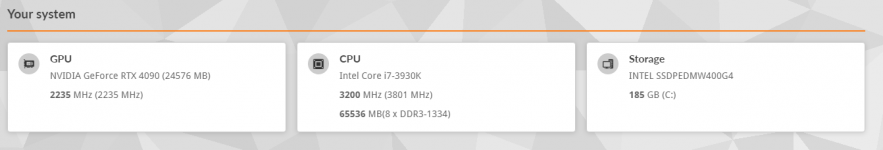

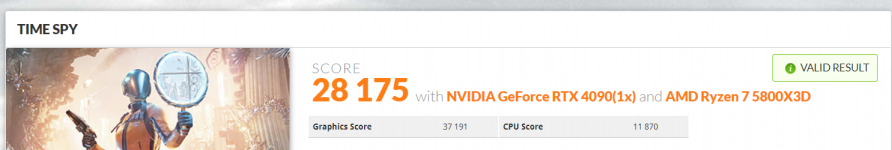

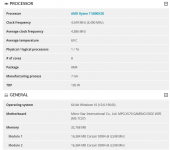

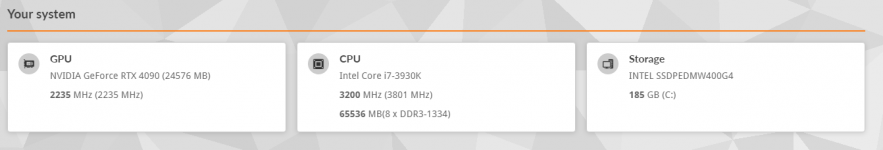

So, I bet this is one of the more unusual CPU/GPU combinations that is now in the TimeSpy Database:

Makes the $1900 I just spent on my 4090 seem cheap. This sucks. I don't mind the top-tier cards costing a chunk, although not thrilled, but these should not be like that at all. I remember being jealous of the folks that got Titans back in the day while I scrambled SLI with 560Ti's and 980s but this is just wrong.

I was thinking the same thing.

I have an MSI Gaming X Trio 24G and an EK waterblock incoming.

I'm sure you're going to love it. The 4090 is everything they make it out to be. I was hesitant at first and originally planned to wait it out for the Ti/Titan or whatever comes next, but Brent's review won me over, and I really don't want to wait 18+ months in hopes of getting one. If I hadn't gotten lucky in getting mine I probably would've just held out, but I am happy. Not only does it significantly outperform the 3090 Ti, but it manages to do so at the same or less power in a lot of testing I've done. For giggles, I've been using mild OC settings (clock at 2860-2885 MHz and mem at 11,000 MHz w/ power +107%) and in most games, the liquid X keeps it 60 or under w/ 30-50% fans while AB says it's drawing 385-425W. Just to hammer it I tested RE3 Remastered and cranked IQ to 200%, saw it eat 18GB of VRAM, and then pulled 500-530W with the same settings while holding 50-55 FPS at 4K. The game looked incredible at that point too. I did a lot of testing with it, and once you slap that block on it'll take an already impressive card to another level. I tested Crysis Remastered and that was fun too. It really is the best 4K card yet, and based on all these power comparisons for the other Ampere cards I am impressed with the design, but it really sucks with what is happening with supplies.

Yeah, I was thinking similarly. I only very recently spent money on a rather expensive 6900xt, but I knew that was a risk.

The 4090 appears to be a no-holds-barred approach to getting the most out of this gen, and because of that I think it might just have some staying power. I'm cautiously optimistic for a similar experience to my Pascal Titan X I had from 2016 to 2021.

Admittedly I held on to that Titan a little bit longer than I had planned. I balked at the 2080 Ti when the "space invaders" problem reared its ugly head, and by the time it was solved, I thought it was way too late in the product cycle to spend big bucks on a top end card.

With the 4090 - however - I'm hoping I will be happy for at least 2-3 years.

Well, after some more research, I may have bought the wrong version of the 4090 for my intents. :/

Apparently the MSI Gaming X has a power limit of ~450W, which is pretty wimpy as 4090s go.

Beggars can't be choosers though. The only ones I could find in stock without feeding scalpers too much were this one, and a Zotac model, and I'm not sure I trust Zotac for high end boards.

I'm still putting a water block on it. Hopefully I can achieve some decent overclocks by undervolting :/

Pretty sure you'll be able to hang in the 2800s still. From what I've read, anything over 500W is mostly wasted anyway. If you trust it enough you might be able to flash a custom BIOS for more, but I wouldn't recommend it. Yeah, when I started researching these I was really shocked about all the wattage variants that are out there, and even then there are other tradeoffs with them. The GIGABYTE with its AIO is one of the highest but came in 2nd on some tests. Either way, at stock these things are still beasts, the OC gains are minimal at best, and the extra power draw usually isn't proportional to what you get back. I'd say the settings I'm using are close to 1:1 but probably still using a bit more power than needed vs just keeping it at stock.

I hope so.

Some reviews I read had one up at ~2930 MHz pulling about 430W, so I feel like I ought to be able to at least match that under water, but I don't have a good feel for how power limited these are yet, or if the limit lies elsewhere.

Zero regrets buying mine also. It's an awesome card.

Unless you're racing benchmarks, ~400W is probably the efficient limit. I'll note that even my 3080 12GB, not even a Ti card, has 3x8pin power and can pull 450W with power limits raised. It's still never going to outrun a 3090.

I'm starting to wonder if I am ever going to get this 4090.

My UPS tracking number was created on Tuesday (01/03) and it is still sitting in "label created" status and listed as "on the way" with delivery due on Monday, but no detailed steps in the progress since then :/

I have Paypal buyer protection on it, so I know one way or another I'm not going to be out any money, but I have an EKWB water block on the slow boat from Slowvenia. If this 4090 winds up resulting in a refund, no guarantees the next one I find is the same model that fits the same waterblock :/ International waterblock returns are likely not happening. Probably can sell it, but still, that would be a pain in the ***.

Let's hope it's one of those borked UPS things. I've seen some occasions with them and USPS/FedEx (a lot with both of these) where tracking doesn't get updated for one reason or another until the day of delivery, and sometimes not even then until after it's delivered. Fingers crossed for ya!

Was having the issue where the label was created but sat for days without any sign of pick up or anything then all of a sudden it was out for delivery. I think this time of year really messes with the tracking system. I had my Maximus motherboard's tracking do the same thing recently.

Yeah, I've had those too, but I've also had ones where they just disappeared en route. Fingers crossed!

Aaahhh, so you decided to move on from the 6900 XT already huh?

I know you game at 4K, but I feel like a 4090 should last you decently longer than that.
